Businesses today rely heavily on data to make decisions, which makes managing and orchestrating data workflows essential. Many organizations struggle to maintain data accuracy, provide real-time access to data, and support collaboration among teams, and traditional data management approaches often fall short of these needs. This is where DataOps tools come in: they automate, monitor, and optimize data workflows so that teams can deliver reliable, high-quality data.


In this article, we will review the best DataOps tools, including open-source tools, to help you choose the right tool for your data strategy. Whether you are a startup looking for cost-effective tools or an enterprise that needs enterprise-grade solutions, we’ve got you covered.

What is DataOps?

DataOps (Data Operations) is a set of processes, technologies, and practices designed to improve collaboration, automation, and data integration. Its key objectives are to promote better teamwork among data analysts, data engineers, and operations teams; to automate repetitive tasks to minimize manual errors; and to deliver high-quality data in real time so organizations can make faster decisions.

What are DataOps Tools?

DataOps tools are software solutions that enhance and streamline data lifecycle management so that organizations can manage and analyze data effectively. Their core capabilities include:

- Automation: simplifying repetitive tasks for efficiency.

- Collaboration: enhancing team productivity and cross-functional collaboration.

- Observability: providing real-time visibility into data pipelines and their performance.

Key Features to Look for in DataOps Tools

When selecting a DataOps tool, consider the following essential features:

- Usability: Tools with a minimal interface that require little or no coding can be used by a wider range of people.

- Integration Capabilities: Choose tools that integrate easily with your data sources, as well as with data storage and analytics solutions.

- Automation and Scalability: Tools with robust automation capabilities make your work easier and reduce manual errors. Scalability matters too: as data volumes grow, the tool you choose must be able to handle them.

- Real-time Monitoring and Observability: Monitoring and observability features let you detect and resolve issues in real time, helping keep data accurate and available.

- Security and Compliance: Choose tools with built-in security features that support compliance with regulations and standards such as GDPR, HIPAA, and SOC 2.

Top DataOps Tools for 2025

1.) StreamSets

G2 Rating: 4.0 out of 5 stars (99)

IBM StreamSets is a powerful end-to-end data integration platform designed for modern data workflows. It lets users create real-time data pipelines and monitor and manage data workflows across hybrid and multi-cloud environments.

Features:

- It offers real-time pipeline execution.

- It provides smart data flow monitoring.

- It supports a wide range of data sources.

Pricing: You can connect with the IBM sales team to get a pricing estimate for your specific use case.

Ideal For: It is ideal for large enterprises with complex needs and workflows.

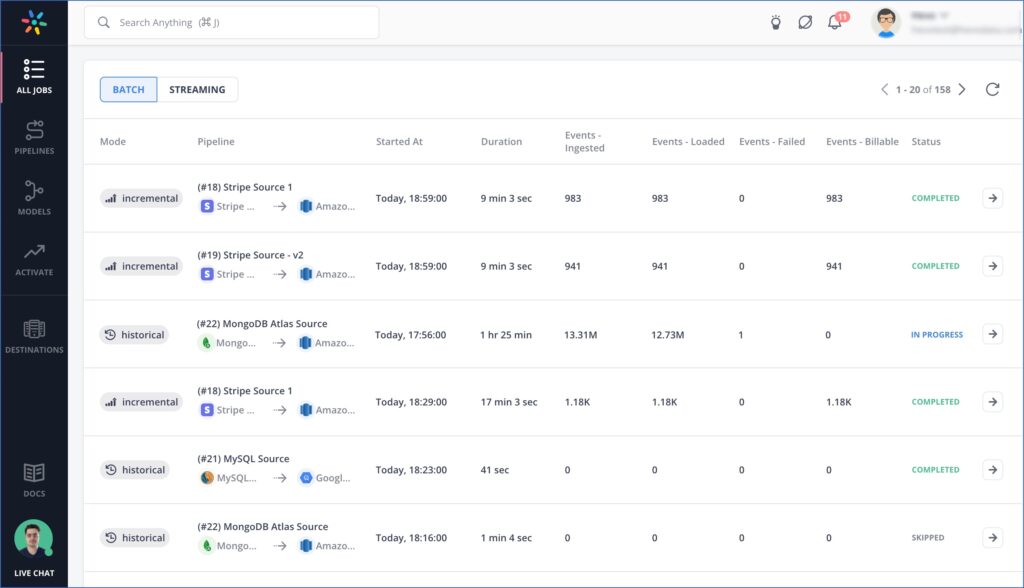

2.) Hevo Data

G2 Rating: 4.3 out of 5 stars (241)

Hevo Data is a no-code data pipeline platform designed to automate data integration. Its easy-to-use interface and advanced monitoring and transformation capabilities align with the DataOps principles of automation and high data quality. It offers real-time data synchronization and end-to-end encryption, and it is designed to prevent data loss.

Features:

- No-code, user-friendly interface.

- It offers 150+ pre-built connectors for a fully automated data integration process.

- Hevo has a fault-tolerant architecture and is GDPR and HIPAA compliant.

Pricing: Hevo Data offers four pricing tiers to meet different needs, with pricing starting at $239/month. It also offers a free tier for moving small amounts of data.

Ideal For: Hevo Data is ideal for businesses looking for an easy-to-use, scalable, and highly reliable DataOps tool.

3.) Talend Data Fabric

G2 Rating: 4.4 out of 5 stars (14)

Talend Data Fabric is a comprehensive solution from Talend for data integration, integrity, and governance. It simplifies complex workflows by combining many tools and features in one platform.

Features:

- It supports the full API lifecycle.

- It offers collaborative management features.

- It offers data stewardship and catalog features.

Pricing: It offers 4 pricing options: Starter, Standard, Premium, and Enterprise. You can contact their sales team for a quote.

Ideal For: Talend Data Fabric is ideal for organizations requiring advanced data governance and data integration.

4.) Databricks Data Intelligence Platform

G2 Rating: 4.5 out of 5 stars (358)

Databricks is an all-encompassing platform that combines data processing, data analytics, and machine learning. Its highly scalable architecture supports large datasets and complex workflows, enabling teams to work efficiently with big data.

Features:

- Databricks Data Intelligence Platform provides a collaborative workspace.

- It has a highly scalable architecture.

- Offers advanced AI and machine learning capabilities.

Pricing: Pricing depends on usage and infrastructure, and each product is priced separately. For example, Data Engineering starts at $0.15 per DBU (Databricks Unit).

Ideal For: It is ideal for companies looking to leverage AI and ML along with traditional DataOps.

5.) Alteryx

G2 Rating: 4.6 out of 5 stars (624)

Alteryx is a popular tool for data preparation, blending, and analytics. It has a user-friendly interface, and its AI features automate data analytics to deliver fast, reliable insights. It also lets users build predictive analytics models without complex coding.

Features:

- Alteryx provides robust data preparation and blending tools.

- It has a drag-and-drop interface, which makes it easy for non-technical users to use.

- It reduces cost and increases efficiency through automation.

Pricing: Alteryx offers two editions: Designer Cloud, which starts at $4,950, and Designer Desktop, which starts at $5,195. For pricing outside the US, contact Sales.

Ideal For: Alteryx is ideal for businesses and teams focused on data preparation and advanced analytics.

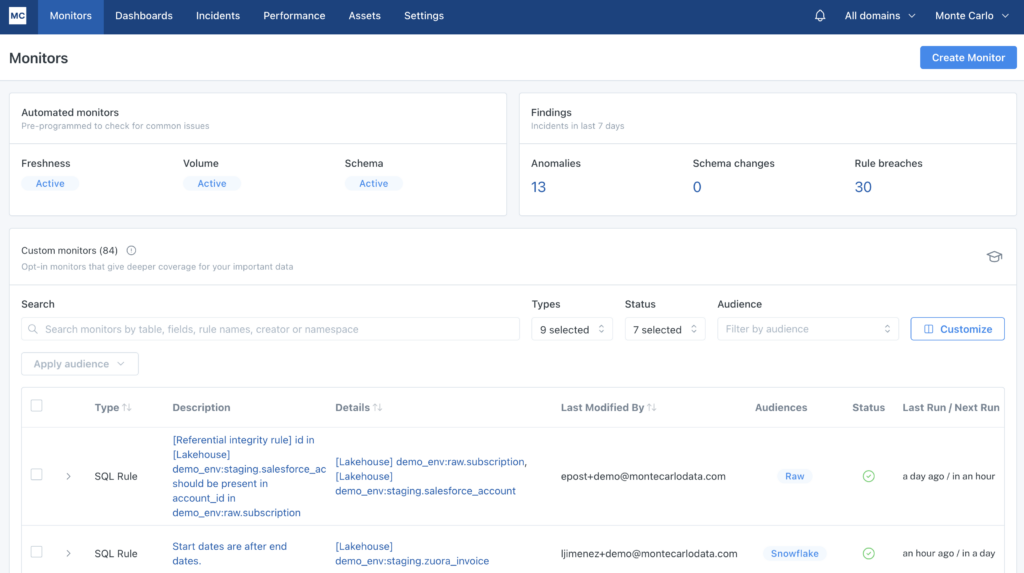

6.) Monte Carlo

G2 Rating: 4.5 out of 5 stars (286)

Monte Carlo is a well-known data observability platform focused on data reliability and quality. Its automated monitoring and alerting help ensure that data is correct and that no data is lost.

Features:

- Monte Carlo provides end-to-end data observability.

- Its functionality includes anomaly detection and root cause analysis.

- It is user-friendly and integrates well with other solutions.

Pricing: Monte Carlo offers three pricing plans: Start, Scale, and Enterprise. Using Monte Carlo, you only pay for what you use.

Ideal For: Monte Carlo is ideal for organizations that want to reduce downtime and ensure data reliability.
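Monte Carlo's detection models are proprietary, so the following is only a generic illustration of the idea behind volume anomaly detection, not Monte Carlo's method. It is a minimal z-score check that flags a day's row count when it falls far outside recent history. The function name, the 3-standard-deviation threshold, and the sample counts are all hypothetical.

```python
from statistics import mean, stdev


def is_volume_anomaly(history: list[int], latest: int, threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` sample standard
    deviations away from the mean of `history` (a simple z-score test)."""
    if len(history) < 2:
        return False  # not enough data to estimate the spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat history: any change at all is unusual.
        return latest != mu
    return abs(latest - mu) / sigma > threshold


# Hypothetical daily row counts for one table over the past week.
daily_row_counts = [10_120, 9_980, 10_050, 10_210, 9_940, 10_075, 10_130]
print(is_volume_anomaly(daily_row_counts, 10_090))  # False
print(is_volume_anomaly(daily_row_counts, 2_300))   # True
```

A fixed threshold ignores trends and weekly seasonality, which a production monitor would likely need to model.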

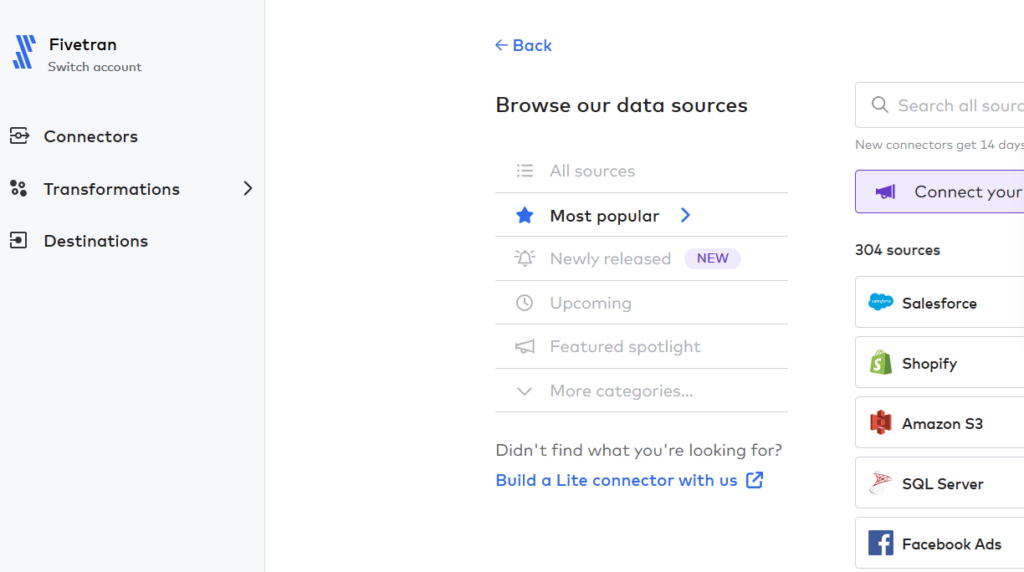

7.) Fivetran

G2 Rating: 4.2 out of 5 stars (406)

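Fivetran is another data integration platform on this list. It supports a wide variety of data sources and destinations. Like other data integration tools, Fivetran automates the ETL process. More precisely, it follows an ELT approach: data is loaded into the destination first and then transformed there.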

Features:

- Fivetran supports 500+ data sources.

- It automatically adjusts to schema changes without any manual effort.

- It offers custom connector functionality.

Pricing: Fivetran offers 5 different pricing options, with the Standard plan being the most popular. It also offers a free trial.

Ideal For: Fivetran is ideal for enterprises seeking an automated ETL tool.
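Fivetran handles schema changes inside its managed service, so its internals are not shown here. To illustrate roughly what detecting schema drift involves, this sketch compares a source's column-to-type mapping with the destination's and adds any new columns. The function names and the "add new columns, leave existing ones alone" policy are assumptions made for the example.

```python
def diff_schema(old: dict[str, str], new: dict[str, str]) -> dict[str, list]:
    """Compare two {column: type} mappings and report what changed."""
    added = sorted(set(new) - set(old))
    removed = sorted(set(old) - set(new))
    retyped = sorted(
        (col, old[col], new[col])
        for col in set(old) & set(new)
        if old[col] != new[col]
    )
    return {"added": added, "removed": removed, "retyped": retyped}


def apply_additions(dest: dict[str, str], source: dict[str, str]) -> dict[str, str]:
    """Return a copy of the destination schema extended with any columns
    that appeared in the source; existing columns are left untouched."""
    merged = dict(dest)
    for col in diff_schema(dest, source)["added"]:
        merged[col] = source[col]
    return merged


# Hypothetical schemas: the source gained a `signup_date` column.
dest = {"id": "INTEGER", "email": "TEXT"}
source = {"id": "INTEGER", "email": "TEXT", "signup_date": "DATE"}

changes = diff_schema(dest, source)
merged = apply_additions(dest, source)
print(changes)  # {'added': ['signup_date'], 'removed': [], 'retyped': []}
print(merged)   # {'id': 'INTEGER', 'email': 'TEXT', 'signup_date': 'DATE'}
```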

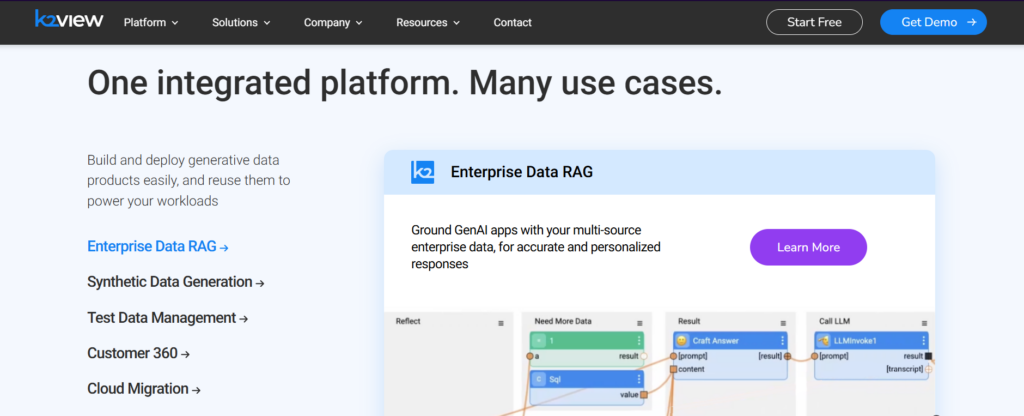

8.) K2View

G2 Rating: 4.4 out of 5 stars (20)

K2View provides a suite of DataOps tools for real-time data integration, governance, and orchestration. Its Data Fabric architecture is designed to keep data fresh and accessible to all teams.

Features:

- K2View provides real-time data delivery, which helps with faster decision-making.

- It also provides enterprise-level data masking to keep your data secure.

- Its Data Fabric architecture ensures integration is smooth and data quality is maintained.

Pricing: K2View offers a simple, transparent pricing model based on the number of Micro-DB read/write operations per month.

Ideal For: It is ideal for organizations that need real-time data transformation and orchestration.
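K2View configures masking inside its platform. The sketch below does not reproduce its implementation; it is a generic example of two common masking techniques. The first is deterministic pseudonymization of an email address: the same input always yields the same token, so joins across tables still work. The second is partial redaction of a card number. The function names and salt handling are hypothetical, and in practice the salt would be kept secret.

```python
import hashlib


def mask_email(email: str, salt: str) -> str:
    """Replace the local part of an email with a salted SHA-256 token.
    The same (email, salt) pair always produces the same masked value."""
    local, _, domain = email.partition("@")
    token = hashlib.sha256((salt + local).encode("utf-8")).hexdigest()[:12]
    return f"user_{token}@{domain}"


def mask_card(number: str) -> str:
    """Hide every digit of a card number except the last four."""
    digits = [c for c in number if c.isdigit()]
    return "*" * max(len(digits) - 4, 0) + "".join(digits[-4:])


print(mask_email("jane@example.com", salt="s3cret"))
print(mask_card("4111 1111 1111 1234"))  # ************1234
```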

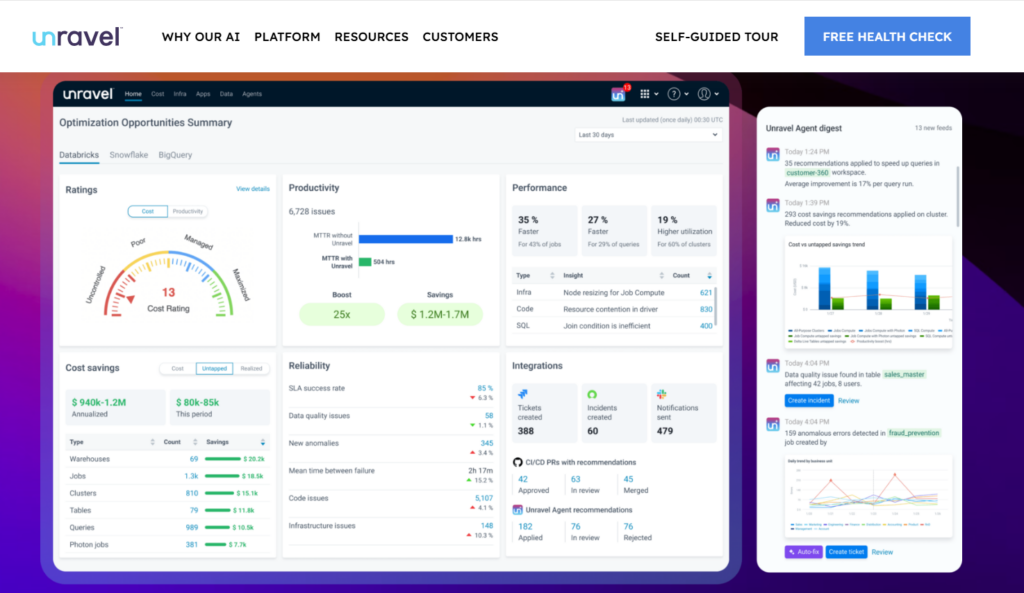

9.) Unravel

G2 Rating: 4.4 out of 5 stars (34)

Unravel is a DataOps observability tool. It optimizes big data pipelines and applications for performance and cost efficiency, and its AI automatically finds and fixes issues to keep data workflows running smoothly.

Features:

- Unravel’s advanced AI provides automated performance tuning.

- It also offers features such as anomaly detection and cost analysis.

Pricing: Unravel offers pay-as-you-go pricing based on DBU consumption as well as a custom pricing plan based on enterprise requirements.

Ideal For: Unravel is ideal for optimizing big data workloads.

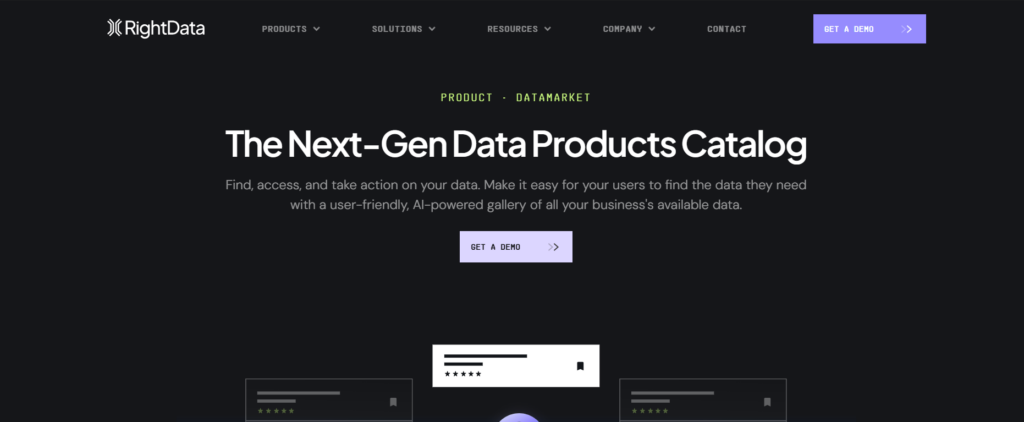

10.) RightData

G2 Rating: 4.3 out of 5 stars (13)

RightData offers data reliability and quality tools designed specifically to simplify complex DataOps workflows. They help improve data accuracy, consistency, and efficiency.

Features:

- It has a user-friendly interface.

- RightData provides data quality checks and workflow automation.

- It also provides automated performance-tuning suggestions.

Pricing: RightData offers custom pricing, which is available upon request.

Ideal For: RightData is ideal for businesses looking for a combined data testing and orchestration tool.
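RightData's checks are configured through its own interface. The minimal Python sketch below shows the general idea behind rule-based data quality checks: each rule is a named predicate, and the checker reports which rows fail which rule. The rule names and sample rows are hypothetical.

```python
from typing import Any, Callable

Row = dict[str, Any]
# A rule is a name plus a predicate that returns True when a row passes.
Rule = tuple[str, Callable[[Row], bool]]


def run_checks(rows: list[Row], rules: list[Rule]) -> dict[str, list[int]]:
    """Return, for each rule, the indexes of the rows that fail it."""
    failures: dict[str, list[int]] = {name: [] for name, _ in rules}
    for i, row in enumerate(rows):
        for name, predicate in rules:
            if not predicate(row):
                failures[name].append(i)
    return failures


rules: list[Rule] = [
    ("id_not_null", lambda r: r.get("id") is not None),
    # Amount must be numeric and zero or greater.
    ("amount_non_negative",
     lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0),
]
orders = [
    {"id": 1, "amount": 25.0},
    {"id": None, "amount": 10.0},
    {"id": 3, "amount": -5.0},
]
report = run_checks(orders, rules)
print(report)  # {'id_not_null': [1], 'amount_non_negative': [2]}
```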

DataOps Open Source Tools

The top open-source DataOps tools are:

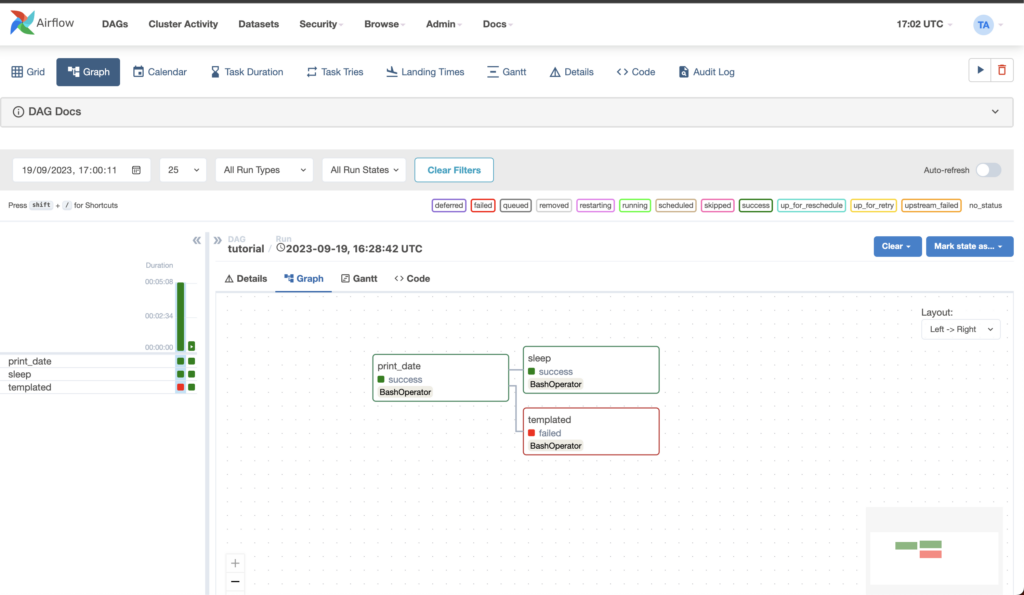

1.) Apache Airflow

G2 Rating: 4.3 out of 5 stars (86)

Apache Airflow is a widely used and popular open-source tool for orchestrating workflows as DAGs (Directed Acyclic Graphs). It is highly customizable and scalable, which makes it an ideal choice for users who work with big data.

Features:

- It supports integration with multiple platforms and destinations.

- Workflows are defined in standard Python, so Python knowledge is required to set them up.

- A modern web UI lets you monitor and schedule workflows.

Pricing: Free (open-source)

Ideal For: Teams that need a highly customizable and scalable solution.
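In Airflow itself, tasks are defined with its Python API and dependencies are declared with the `>>` operator. To show what modeling a workflow as a DAG buys you without requiring Airflow to be installed, this sketch uses Python's standard `graphlib` module (Python 3.9+) to group hypothetical tasks into batches. Every task in a batch has all of its upstream tasks finished, so the tasks within a batch could run in parallel. This is a conceptual illustration, not how Airflow's scheduler is implemented.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "load_warehouse": {"transform"},
    "notify": {"load_warehouse"},
}


def parallel_batches(dag: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into batches whose upstream tasks are all done.
    prepare() raises graphlib.CycleError if the graph contains a cycle."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        batches.append(ready)
        sorter.done(*ready)  # mark the whole batch as finished
    return batches


batches = parallel_batches(pipeline)
print(batches)
# [['extract_customers', 'extract_orders'], ['transform'], ['load_warehouse'], ['notify']]
```

The acyclic requirement is what makes this possible: a cycle would leave no task whose dependencies can ever be satisfied.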

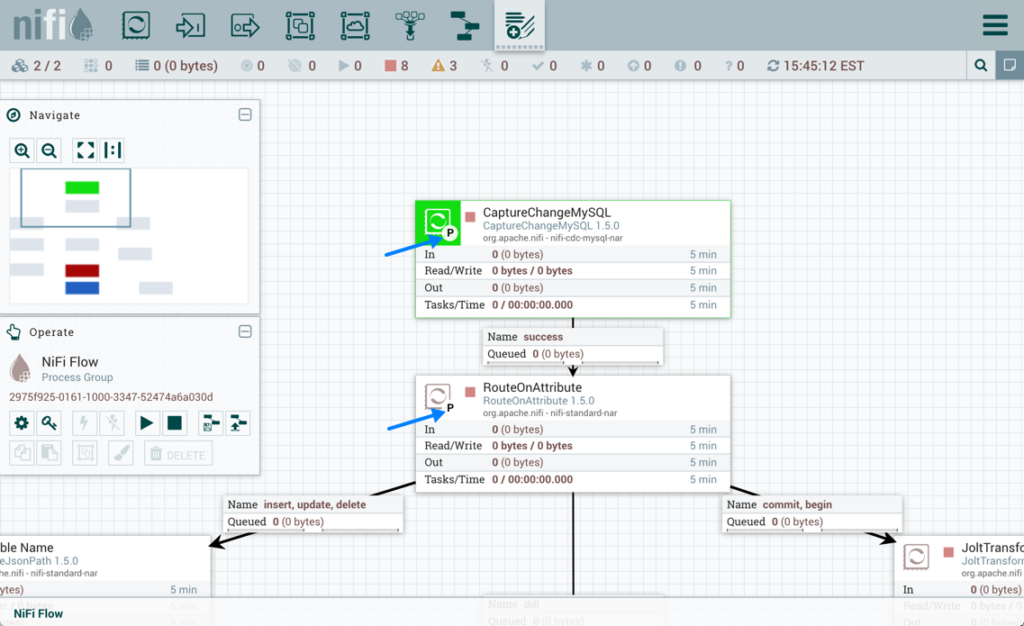

2.) Apache NiFi

G2 Rating: 4.2 out of 5 stars (24)

Apache NiFi is another open-source tool that automates data flow between systems through a visual interface. It has a scalable architecture and supports both data ingestion and data routing.

Features:

- NiFi provides real-time monitoring and reporting.

- Its asynchronous design enables high throughput.

- It offers low latency and dynamic prioritization.

Pricing: Free (open-source)

Ideal For: Apache NiFi is ideal for organizations needing a simple and secure data flow management solution.
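In NiFi, prioritization is configured per connection by selecting prioritizers in the UI. The sketch below only illustrates the concept with Python's `heapq`. Queued items with a lower `priority` value are released first, and ties fall back to arrival order (FIFO). The class name, the `priority` attribute, and its default of 10 are assumptions, not NiFi's API.

```python
import heapq
import itertools


class PrioritizedQueue:
    """Release items with the lowest `priority` value first; items with
    equal priority come out in the order they arrived (FIFO)."""

    def __init__(self) -> None:
        self._heap: list[tuple[int, int, dict]] = []
        self._arrival = itertools.count()  # monotonically increasing tiebreaker

    def put(self, flowfile: dict) -> None:
        priority = int(flowfile.get("priority", 10))  # hypothetical default
        heapq.heappush(self._heap, (priority, next(self._arrival), flowfile))

    def get(self) -> dict:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


q = PrioritizedQueue()
q.put({"id": "a", "priority": 5})
q.put({"id": "b"})  # no priority attribute, so it gets the default of 10
q.put({"id": "c", "priority": 1})
q.put({"id": "d", "priority": 5})
released = [q.get()["id"] for _ in range(len(q))]
print(released)  # ['c', 'a', 'd', 'b']
```

The arrival counter keeps the heap from ever comparing two dictionaries, which Python cannot order.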

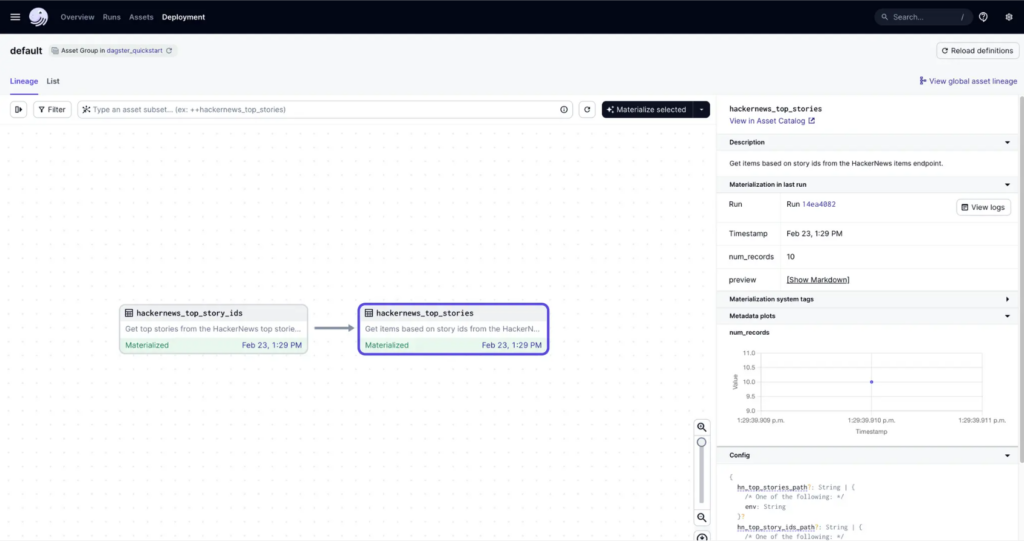

3.) Dagster

Dagster is a data orchestration tool designed for building and maintaining robust data pipelines. It provides strong data observability features.

Features:

- It has a modern, flexible, fault-tolerant architecture.

- It provides integrations with the most popular data tools.

- It offers fully serverless or hybrid deployment options.

Pricing: Free (open-source). It also offers paid plans: Solo, Starter, and Pro.

Ideal For: Dagster should be your go-to choice if you want to build a robust, testable, and maintainable pipeline.
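Dagster models pipelines as assets, and an asset's upstream dependencies can be inferred from its function's parameter names. The following stdlib-only sketch imitates that idea so it runs without Dagster installed. The `register_asset` decorator and `build_asset` function are hypothetical stand-ins, not Dagster's real API.

```python
import inspect
from typing import Any, Callable, Optional

# Hypothetical registry mapping asset names to the functions that compute them.
ASSETS: dict[str, Callable[..., Any]] = {}


def register_asset(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function as an asset; the function name is the asset key."""
    ASSETS[fn.__name__] = fn
    return fn


def build_asset(key: str, cache: Optional[dict[str, Any]] = None) -> Any:
    """Compute an asset after first computing the upstream assets named by
    its parameters. Results are cached so each asset runs at most once."""
    cache = {} if cache is None else cache
    if key not in cache:
        fn = ASSETS[key]
        upstream = inspect.signature(fn).parameters  # parameter names = dependencies
        inputs = {name: build_asset(name, cache) for name in upstream}
        cache[key] = fn(**inputs)
    return cache[key]


@register_asset
def raw_orders():
    return [{"id": 1, "amount": 20}, {"id": 2, "amount": 35}]


@register_asset
def order_total(raw_orders):
    return sum(o["amount"] for o in raw_orders)


print(build_asset("order_total"))  # 55
```

Because each asset is a plain function, it can be unit-tested in isolation: `order_total([{"amount": 1}])` returns 1 without running the rest of the pipeline.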

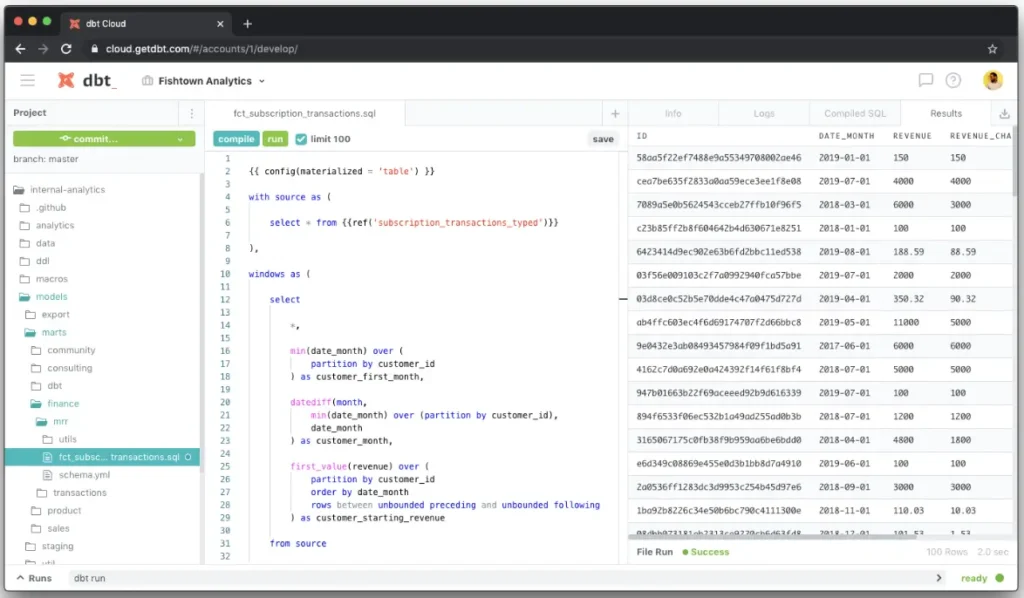

4.) dbt

G2 Rating: 4.8 out of 5 stars (159)

dbt (data build tool) is a widely used open-source tool for transforming data. It is a developer-friendly tool that integrates seamlessly with modern data tools and technologies.

Features:

- Extensive community support and resources are available.

- dbt allows transformation using SQL.

- dbt Copilot helps users automate and accelerate analytics.

Pricing: Free (open-source). Paid plans start at $100 per month per seat.

Ideal For: dbt is ideal for teams and individuals focused on data transformation and analytics.
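In dbt, a model is a `.sql` file containing a `SELECT` statement, and dbt generates the DDL that materializes it, for example as a view or table. Models reference one another with `{{ ref('model_name') }}`. The sketch below mimics a view materialization with Python's built-in `sqlite3` so it runs anywhere. The table, model name, and helper function are hypothetical, and this is not dbt's implementation.

```python
import sqlite3

# A dbt-style "model": just a SELECT; the tool wraps it in DDL.
STG_ORDERS_SQL = """
select id, customer_id, amount
from raw_orders
where amount is not null
"""


def materialize_view(conn: sqlite3.Connection, name: str, select_sql: str) -> None:
    """Create or replace a view from a SELECT statement, similar in spirit to
    dbt's view materialization. `name` is interpolated directly, so it must
    be a trusted identifier."""
    conn.execute(f"drop view if exists {name}")
    conn.execute(f"create view {name} as {select_sql}")


conn = sqlite3.connect(":memory:")
conn.execute("create table raw_orders (id integer, customer_id integer, amount real)")
conn.executemany(
    "insert into raw_orders values (?, ?, ?)",
    [(1, 10, 20.0), (2, 10, None), (3, 11, 35.5)],
)
materialize_view(conn, "stg_orders", STG_ORDERS_SQL)
row = conn.execute("select count(*), sum(amount) from stg_orders").fetchone()
print(row)  # (2, 55.5)
```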

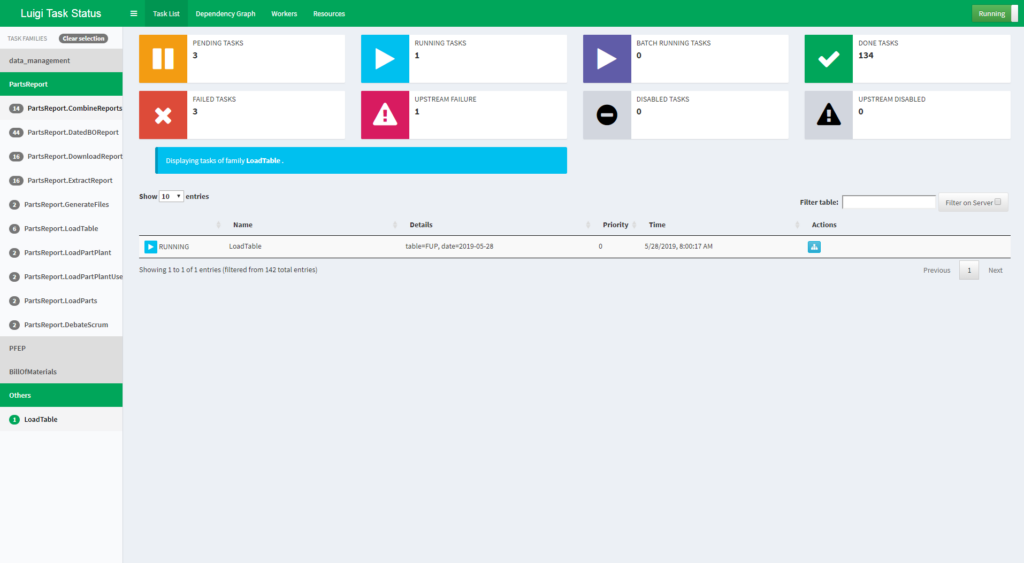

5.) Luigi

Luigi is an open-source Python module for building and managing batch pipelines. It was built at Spotify, is available as an open-source repository on GitHub, and is still maintained by Spotify’s data team.

Features:

- It has a lightweight design.

- Luigi has built-in Hadoop support.

- It handles both dependency resolution and visualizations very well.

Pricing: Free (open-source)

Ideal For: Luigi is ideal for lightweight, dependency-based workflow management.
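Luigi tasks subclass `luigi.Task` and implement `requires()`, `output()`, and `run()`. A task counts as complete when its output target exists, which is how Luigi avoids re-running finished work. The stdlib-only sketch below mimics that pattern, with an in-memory dictionary standing in for output targets. The `Task` base class, `build` function, and example tasks are hypothetical and are not Luigi's API.

```python
class Task:
    """Minimal Luigi-style task: it is complete once its output exists,
    and it runs only after everything it requires is complete."""

    def requires(self) -> list["Task"]:
        return []

    def output(self) -> str:
        return type(self).__name__  # the key this task writes to the store

    def run(self, store: dict[str, object]) -> None:
        raise NotImplementedError

    def complete(self, store: dict[str, object]) -> bool:
        return self.output() in store


def build(task: Task, store: dict[str, object], log: list[str]) -> None:
    """Depth-first dependency resolution: build requirements first, then the
    task itself, skipping anything whose output already exists."""
    if task.complete(store):
        return
    for dep in task.requires():
        build(dep, store, log)
    task.run(store)
    log.append(task.output())


class Extract(Task):
    def run(self, store):
        store[self.output()] = [3, 1, 2]


class Sort(Task):
    def requires(self):
        return [Extract()]

    def run(self, store):
        store[self.output()] = sorted(store["Extract"])


store, log = {}, []
build(Sort(), store, log)
print(log, store["Sort"])  # ['Extract', 'Sort'] [1, 2, 3]
build(Sort(), store, log)  # second run: outputs exist, so nothing re-runs
print(log)                 # ['Extract', 'Sort']
```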

Conclusion

DataOps tools help organizations streamline workflows, ensure data quality, and foster collaboration. In this article, we covered a wide range of tools, from comprehensive enterprise solutions to open-source options, so there is something for every need and budget. Selecting the right tool starts with understanding your requirements, such as ease of use and integration capabilities. Tools like Alteryx and Databricks cater to advanced analytics and wide-ranging needs, whereas open-source solutions such as Apache Airflow and Dagster offer flexibility for technically savvy teams.

For teams seeking scalability as well as ease of use, Hevo Data is a standout option. Its no-code interface and fault-tolerant architecture make it an invaluable tool for your DataOps strategy. Sign up for a 14-day free trial and experience the feature-rich Hevo suite firsthand.

FAQs

1. What are the key components of DataOps?

Key components of DataOps include data pipeline automation, monitoring, CI/CD workflows, data quality management, and cross-functional collaboration.

2. What is a DataOps platform?

A DataOps platform brings together collaboration, automation, and monitoring for data pipeline processes. Examples include tools like dbt, Hevo Data, and Dagster.

3. What is the difference between DataOps and DevOps?

DataOps focuses on managing data workflows, while DevOps focuses on software development and deployment.