Companies are adopting new approaches to get the most value from their data in today's fast-moving big data world. Of these approaches, data mesh is among the most promising. It decentralizes the management of data architecture while still giving organizations effective control at both the aggregate and granular levels.

In this blog, we shall outline the essentials of data mesh, why it is necessary for businesses, and explore some of the various data mesh tools.

What is Data Mesh?

Data mesh is a decentralized approach to data architecture based on domain-oriented ownership of data and on self-serve data infrastructure. It differs from a traditional centralized data architecture, where a single central team owns and manages all of the organization's data. In a data mesh, different teams within an organization take ownership of their respective data and treat it as a product. This encourages agility, collaboration, and scalability, so organizations can respond quickly to changing business needs.

Principles of a Data Mesh

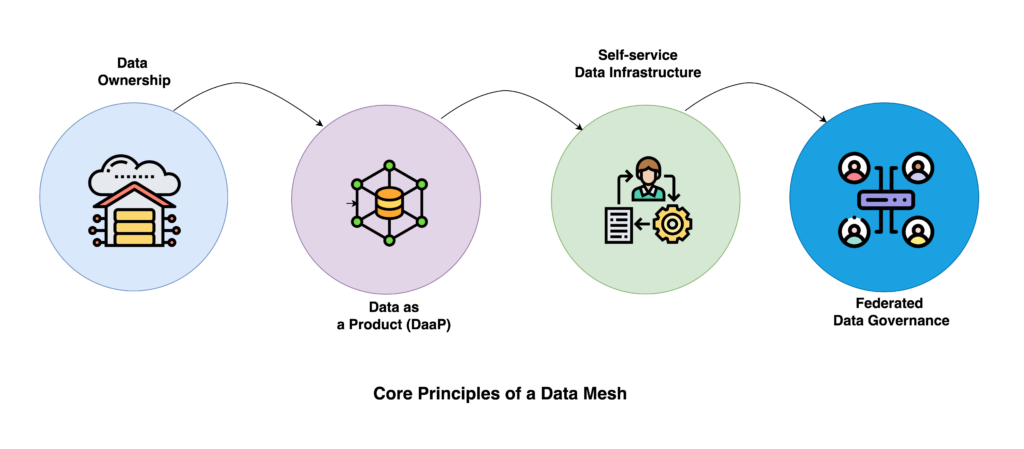

There are four basic principles of Data Mesh. They are as follows:

- Domain-driven ownership of data: Every team owns the data it creates or produces. This domain-driven approach fosters accountability and ownership.

- Data as a product: Data is treated just like a product, and a team dedicated to it focuses on its quality and usability.

- Self-service data infrastructure platform: Organizations and companies need to invest in platforms that allow teams to access their data assets independently.

- Federated computational governance: Governance must be maintained collaboratively to achieve compliance, yet teams are allowed flexibility.

Take a look at the above four principles in detail to get a better understanding of the same.
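To make the principles more concrete, here is a minimal Python sketch of how a domain team might describe a data product and how a shared governance rule set might be applied to it. The `DataProduct` class, its fields, and the `governance_violations` function are hypothetical names chosen for illustration; they do not come from any specific data mesh tool.

```python
from dataclasses import dataclass, field


@dataclass
class DataProduct:
    """Hypothetical descriptor that a domain team publishes for its data."""
    name: str
    owner_domain: str            # domain-driven ownership
    schema: dict                 # column name -> type, part of the product contract
    contains_pii: bool = False
    tags: set = field(default_factory=set)


def governance_violations(product: DataProduct) -> list:
    """Apply the same global rules to every product (federated governance)."""
    problems = []
    if not product.owner_domain:
        problems.append("missing owner domain")
    if not product.schema:
        problems.append("missing schema")
    if product.contains_pii and "pii" not in product.tags:
        problems.append("PII data must carry the 'pii' tag")
    return problems


# Two products owned by different domains (illustrative data only).
orders = DataProduct(
    name="orders_daily",
    owner_domain="sales",
    schema={"order_id": "string", "amount": "float"},
)
customers = DataProduct(
    name="customer_profiles",
    owner_domain="marketing",
    schema={"customer_id": "string", "email": "string"},
    contains_pii=True,
)

print(governance_violations(orders))     # []
print(governance_violations(customers))  # ["PII data must carry the 'pii' tag"]
```

In this sketch each team stays free to shape its own product, while the rules in `governance_violations` are the same for everyone. That is one simple way to picture the balance between flexibility and compliance.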

Hevo is a no-code data pipeline tool that helps you build and maintain data pipelines from various sources to destinations in minutes. Its fault-tolerant architecture is designed to prevent data loss. Its features include:

- 150+ pre-built connectors for seamless data migration

- Support for both pre-load and post-load data transformations, with a drag-and-drop interface allowing users with no coding knowledge to perform complex transformations

- Automatic schema mapping, eliminating the need for manual mapping from source to destination

- HIPAA, SOC2, and GDPR compliance, ensuring your data is always secure

Hevo is a strong fit as the data pipeline layer of your data mesh architecture. Don’t just take our word for it, try it yourself!

Various Types of Data Mesh Tools for Modern Data Management

Data Storage Tools

Scalable and efficient data storage tools form the foundation of a data mesh architecture. They let each domain store and manage its data reliably as volumes grow.

- Google BigQuery: Google BigQuery is Google’s big data warehouse service. It can store large volumes of data and perform analysis on it. It is a fully managed, serverless architecture service. BigQuery serves as an excellent storage option for businesses that require scalable data solutions and robust analytics. Additionally, it offers integrated machine-learning features.

- Snowflake: Snowflake is a data warehouse as a service hosted on the cloud. Its easy-to-use interface and high scalability feature allow organizations to not only store data but also perform analytics on it. Snowflake provides a wide range of integrations to facilitate the easy movement of data from various sources. The Snowflake data warehouse can be hosted on three cloud service providers, i.e., AWS, Google Cloud Platform, and Microsoft Azure. It is a fully managed cloud data warehouse, and users don’t have to worry about maintenance.

Take a look at the importance of data management to work with your data seamlessly.

Data Cataloging Tools

Data cataloging is one of the crucial parts of a data mesh architecture. Catalogs enable teams to share knowledge by putting data in context, so that different users are on the same page.

- Alation: Alation is a widely used data cataloging tool, which offers all the standard features of data cataloging and employs machine learning to help organizations manage their metadata and promote data stewardship. It provides the users access to the metadata description, lineage, and documentation of the data assets. Alation offers access to more than 100 connectors to manage the data assets with maximum efficiency.

- Collibra: Collibra is a widely known data cataloging tool that comes with a business glossary and presents metadata in graph form. It also uses artificial intelligence and machine learning to speed up the cataloging of data. Its combination of advanced features and a friendly interface simplifies data discovery and governance. It has a number of data lineage and collaboration features, which make managing metadata easier. Like Alation, Collibra lets you connect your data assets through more than 100 integrations.
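The idea of lineage in a catalog can be sketched in a few lines of Python. This is a toy in-memory catalog, not the data model of Alation or Collibra; the dataset names, the `upstream` field, and the `upstream_lineage` function are all assumptions made for illustration.

```python
# Toy catalog: each entry records an owner, a description, and its direct inputs.
catalog = {
    "raw_orders": {
        "owner": "sales",
        "description": "Orders as received from the shop system",
        "upstream": [],
    },
    "clean_orders": {
        "owner": "sales",
        "description": "Validated orders with normalized currency",
        "upstream": ["raw_orders"],
    },
    "customer_profiles": {
        "owner": "marketing",
        "description": "One row per customer",
        "upstream": [],
    },
    "revenue_by_customer": {
        "owner": "finance",
        "description": "Revenue aggregated per customer",
        "upstream": ["clean_orders", "customer_profiles"],
    },
}


def upstream_lineage(catalog, name):
    """Return every dataset that `name` depends on, directly or indirectly."""
    seen = []
    stack = list(catalog[name]["upstream"])
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.append(current)
            stack.extend(catalog[current]["upstream"])
    return sorted(seen)


print(upstream_lineage(catalog, "revenue_by_customer"))
# ['clean_orders', 'customer_profiles', 'raw_orders']
```

A real catalog adds search, access control, and automated metadata harvesting on top, but the core question it answers is the same: where did this dataset come from, and who owns it?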

Data Pipeline Tools

Data pipelines play an important role in data mesh architecture by moving data reliably from various sources to destinations such as data warehouses, data lakes, and databases.

- Hevo Data: Hevo is a no-code data pipeline platform that migrates data from source to destination. It offers 150+ pre-built connectors to make the integration process smooth. Hevo supports pre-load and post-load transformations so that your data arrives in an analysis-ready format. Its fault-tolerant architecture and end-to-end encryption are designed to keep your data available and protected against loss in transit. Hevo offers several pricing tiers to meet the diverse needs of businesses.

- Matillion: Matillion is a cloud data pipeline tool designed to build and maintain data pipelines. It follows an ELT approach for data transformation, i.e., post-load transformations. Matillion integrates well with data warehouses such as Amazon Redshift, Google BigQuery, and Snowflake. It allows users to perform complex transformations with pre-built components or by writing custom SQL.
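The ELT pattern mentioned above (load first, transform afterwards inside the warehouse) can be illustrated with a small Python sketch. It uses an in-memory SQLite database as a stand-in for a cloud warehouse, which is an assumption made only to keep the example runnable; the table names and sample rows are also invented. It does not show how Hevo or Matillion work internally.

```python
import sqlite3

# In-memory SQLite stands in for a cloud data warehouse (assumption for this sketch).
conn = sqlite3.connect(":memory:")

# Extract + Load: land the raw records as-is, without cleaning them first.
raw_rows = [
    ("a1", " 19.50 ", "usd"),
    ("a2", "5.00", "USD"),
    ("a3", None, "usd"),
]
conn.execute("CREATE TABLE raw_orders (order_id TEXT, amount TEXT, currency TEXT)")
conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", raw_rows)

# Transform: run SQL inside the warehouse after loading (post-load transformation).
conn.execute(
    """
    CREATE TABLE clean_orders AS
    SELECT order_id,
           CAST(TRIM(amount) AS REAL) AS amount,
           UPPER(currency) AS currency
    FROM raw_orders
    WHERE amount IS NOT NULL
    """
)

clean_rows = conn.execute(
    "SELECT order_id, amount, currency FROM clean_orders ORDER BY order_id"
).fetchall()
print(clean_rows)  # [('a1', 19.5, 'USD'), ('a2', 5.0, 'USD')]
```

Keeping the raw table untouched is the main design choice here: if a transformation turns out to be wrong, it can be rerun from the loaded data without going back to the source.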

Data Quality Tools

Data quality tools are designed to help organizations ensure that their data is accurate and reliable. These tools automatically implement various functions to monitor and improve the data set’s quality.

- Ataccama Data Quality: Ataccama is a well-known data quality and governance tool that improves data accuracy and quality. It also reduces inconsistencies and protects and masks sensitive data. Ataccama is a strong choice for companies looking to improve data quality with artificial intelligence. It continuously monitors datasets and provides real-time alerts and reports. It also offers related functionality such as metadata management and lineage.

- Informatica Cloud Data Quality: This is Informatica’s data quality offering. It helps companies identify, resolve, and monitor data quality issues across datasets and applications. It works well with other Informatica services and enhances overall data management. With the help of AI, it automates anomaly detection so that data is ready for analysis.
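The kind of monitoring these tools automate can be pictured with a minimal Python sketch that measures completeness and uniqueness on a small dataset. The function names, the sample rows, and the 10% threshold are assumptions for illustration; commercial tools cover far more rules and run them continuously.

```python
def quality_report(rows, required, unique_key):
    """Compute simple completeness and uniqueness metrics for a list of dict rows."""
    missing = {
        col: sum(1 for r in rows if r.get(col) in (None, ""))
        for col in required
    }
    keys = [r.get(unique_key) for r in rows]
    return {
        "rows": len(rows),
        "missing": missing,                          # count of empty values per column
        "duplicate_keys": len(keys) - len(set(keys)),
    }


def is_acceptable(report, max_missing_ratio=0.1):
    """Pass only if keys are unique and no column exceeds the missing-value ratio."""
    if report["duplicate_keys"] > 0:
        return False
    return all(
        count / report["rows"] <= max_missing_ratio
        for count in report["missing"].values()
    )


# Illustrative data: one missing amount and one repeated order_id.
orders = [
    {"order_id": "a1", "amount": 19.5},
    {"order_id": "a2", "amount": None},
    {"order_id": "a2", "amount": 5.0},
    {"order_id": "a4", "amount": 7.25},
]

report = quality_report(orders, required=["order_id", "amount"], unique_key="order_id")
print(report)
# {'rows': 4, 'missing': {'order_id': 0, 'amount': 1}, 'duplicate_keys': 1}
print(is_acceptable(report))  # False
```

In a data mesh, a check like this would typically be owned by the domain team that publishes the dataset, since that team is accountable for the quality of its data product.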

Data Visualization and Reporting

Visualization tools are essential in helping organizations make data-driven decisions from their data. These tools allow users to create dashboards and reports.

- Tableau: Tableau is data visualization software for building polished, interactive dashboards. It is a popular choice for organizations that need to analyze data and visualize it to support business decisions. Its user-friendly interface makes the product accessible to people from different fields, including those with no programming skills. It supports a wide variety of charts and graphs for presenting data effectively.

- Power BI: Power BI is a powerful business analytics tool by Microsoft that, similar to Tableau, enables businesses to visualize their data and share their insights. It integrates well with all other Microsoft services and various data sources. It is a highly scalable platform that promotes data mesh’s self-service capabilities. Its easy-to-use interface allows users to perform business intelligence tasks in minutes.

Information Sharing and Collaboration Tools

Tools for collaboration and data sharing are essential for teamwork in a data mesh environment. These tools allow teams to collaborate easily and ensure everyone is on the same page regarding data assets.

- Slack: Slack is one of the most used collaboration tools. With its easy-to-use interface, it transforms how people in an organization communicate. It helps people stay connected with co-workers and people outside of the organization. The feature that makes it the perfect tool for data mesh architecture is that it ensures everyone in the organization has access to the same shared information.

- Notion: Notion is another tool for sharing information and collaboration. Its built-in AI finds whatever you want in Notion or its connected apps, thus promoting collaboration. Notion combines notes and databases and allows users to collaborate on data projects. It is one of the data mesh tools that not only helps you organize data but also seamlessly shares it with anyone in the organization.

Factors to Consider When Choosing Data Mesh Tools

There are several factors to consider when choosing data mesh tools for your organization. Not every tool will check every box, so identify the factors that matter most for your data needs before making a decision.

- Scalability: This is the most important factor to consider since data needs are volatile and can scale up or down quickly. Choose a tool that scales easily according to your data needs.

- Ease of Integration: The data mesh tool you choose must integrate well and easily with your organization’s existing data infrastructure and frameworks. This will enable seamless data management.

- Self-Service Capabilities: Choose a tool that allows teams to access and manage data without relying heavily on IT resources. Self-service data infrastructure is one of the principles of data mesh architecture, so the tool should support decentralized work rather than reintroduce a central bottleneck.

- Pricing and Cost: The pricing and cost of data mesh tools must be appropriately evaluated to ensure that they meet your budgetary goals and are flexible in case your data needs change in the future.

- Support & Documentation: This may not seem like an essential factor at first, but support and documentation play a major role in the long term. Make sure the tool you choose comes with sufficient support and documentation to help you when you run into difficulties.

- Security & Compliance: Security and compliance apply to every tool you select, because each one handles your data. Make sure the tools you choose offer strong encryption and comply with recognized international security standards and certifications.
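One simple way to weigh the factors above against each other is a weighted scoring matrix. The Python sketch below is only an illustration: the weights, the 1 to 5 scores, and the names `tool_a` and `tool_b` are made up, and you would replace them with your own priorities and evaluations.

```python
# Weights in percent; they sum to 100. Scalability is weighted highest here,
# but these numbers are assumptions, not recommendations.
weights = {
    "scalability": 30,
    "integration": 20,
    "self_service": 20,
    "cost": 10,
    "support": 10,
    "security": 10,
}

# Hypothetical candidates scored from 1 (poor) to 5 (excellent) per factor.
candidates = {
    "tool_a": {"scalability": 5, "integration": 3, "self_service": 4,
               "cost": 2, "support": 4, "security": 5},
    "tool_b": {"scalability": 3, "integration": 5, "self_service": 4,
               "cost": 5, "support": 3, "security": 4},
}


def weighted_score(scores):
    """Return the weighted average score on the same 1 to 5 scale."""
    return sum(weights[factor] * scores[factor] for factor in weights) / 100


# Rank candidates from highest to lowest weighted score.
ranked = sorted(
    ((name, weighted_score(scores)) for name, scores in candidates.items()),
    key=lambda pair: pair[1],
    reverse=True,
)
print(ranked)  # [('tool_a', 4.0), ('tool_b', 3.9)]
```

The result is sensitive to the weights, so it is worth agreeing on them with the teams involved before scoring any tool.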

Finally, you can also check out how the data mesh architecture works to strengthen your data mesh concepts.

Conclusion

Implementing a data mesh approach requires several tools, each with its own functions and features. This article has outlined the core aspects of data mesh architecture and the tools needed to implement it effectively. Organizations can adopt a data mesh with the help of data cataloging, pipeline, storage, quality management, visualization, and collaboration tools. Weigh all of the factors discussed above before settling on the tools best suited to your needs.

Another tool that can help during your data mesh journey is Hevo Data. The tool features 150+ pre-built connectors that help easily integrate multiple sources and destinations for data migration. Sign up for Hevo’s 14-day free trial and experience seamless data migration.

FAQs

1. Is data mesh a tool?

Data mesh is not a tool but an approach towards data management. It is an architectural change from traditional data architectures to a new decentralized one using various tools.

2. What are the 4 pillars of data mesh?

The four pillars of data mesh architecture are domain-driven ownership of data, data as a product, self-service data infrastructure, and federated computational governance.

3. Is data mesh a framework?

Yes, a data mesh is an architectural framework and approach that follows four principles: domain-driven ownership of data, data as a product, self-service data infrastructure, and federated computational governance.