67% of enterprises rely on data integration to support analytics and BI platforms today, and 24% plan to do so within the next 12 months. In today's data-driven world, businesses produce enormous amounts of data from sources such as customer interactions, IoT devices, web logs, transactional systems, and external APIs. This data often sits in silos, which makes it difficult for businesses to analyze it and collaborate across teams. Data integration unifies data from disparate sources into a single, consistent system that can be analyzed efficiently. This process is critical for organizations that want to use data to drive growth, make better decisions, and get ahead of the competition.

We will explore the most important parts of big data integration in this blog, including its components, challenges, tools and best practices.

Overview of Big Data Integration

Big Data Integration is the process of consolidating structured, semi-structured, and unstructured data from various systems to provide a consistent view. The objective is to make data accurate, actionable, and accessible for reporting, analytics, and operational requirements. It is different from traditional integration processes as it deals with much larger amounts of data and needs more advanced tools and methods to handle complexity, velocity, and diversity.

Big Data Integration vs. Data Integration

| Aspect | Big Data Integration | Data Integration |
| --- | --- | --- |
| Definition | Combining massive datasets from various structured, semi-structured, and unstructured sources. | Consolidating data from multiple sources into a single format. |
| Focus | Handling large volumes, variety, and velocity of data for advanced analytics. | Organizing data for standardized reporting and analysis. |
| Use Cases | Machine learning models, real-time analytics, IoT data processing. | Business intelligence platforms, data warehouses. |
| Outcome | Scalable insights from complex data. | Unified, standardized datasets for reporting. |

Example:

- Data Integration: Consolidating sales data from multiple branches into a centralized database for monthly reporting.

- Big Data Integration: Streaming real-time sensor data from IoT devices to detect anomalies.
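The streaming example can be sketched in a few lines of Python. This is a minimal illustration, not a production detector: it assumes readings arrive as plain floats, and the helper name `detect_anomalies`, the window size, and the z-score threshold of 3 are hypothetical choices. Each reading is compared with the mean and standard deviation of the most recent readings and flagged when it lies far outside that range.

```python
from collections import deque
from statistics import mean, stdev
from typing import Deque, Iterable, Iterator, Tuple


def detect_anomalies(
    readings: Iterable[float], window: int = 20, threshold: float = 3.0
) -> Iterator[Tuple[int, float]]:
    """Yield (index, value) for readings far from the recent rolling mean.

    Hypothetical helper: a reading is scored only once `window` earlier
    readings are available, using a rolling z-score.
    """
    recent: Deque[float] = deque(maxlen=window)
    for i, value in enumerate(readings):
        if len(recent) == window:
            mu = mean(recent)
            sigma = stdev(recent)
            # Skip scoring when the history is perfectly flat (sigma == 0).
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                yield i, value
        # The new reading joins the window; the oldest one drops out.
        recent.append(value)


# Usage: a steady temperature feed with one spike.
stream = [20.1, 20.3, 19.9, 20.0, 20.2, 20.1, 35.0, 20.0]
print(list(detect_anomalies(stream, window=5)))  # [(6, 35.0)]
```

Because the function is a generator, it can consume an unbounded stream one reading at a time, which is the key difference from batch consolidation of branch sales. Note that a flagged spike still enters the window; a production detector might exclude flagged readings from the baseline.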

Key Components of Big Data Integration

1. Data Ingestion

This is the process of collecting data from different sources. These sources can include:

- Relational databases

- Cloud storage platforms

- APIs

- IoT devices and sensors

For instance, a retail business may use online platforms and point-of-sale systems to gather data about customer purchases to examine purchasing patterns.
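The ingestion step for this retail example can be sketched as two small parsers that map each source's native format onto one shared record type. The CSV column names (`customer`, `sku`, `total`), the JSON layout, and the `Purchase` type are hypothetical; real exports will differ, but the pattern of normalizing records at the point of ingestion stays the same.

```python
import csv
import io
import json
from dataclasses import dataclass
from typing import List


@dataclass
class Purchase:
    """Common record shape shared by every ingested source (hypothetical)."""
    source: str
    customer_id: str
    sku: str
    amount: float


def ingest_pos_csv(text: str) -> List[Purchase]:
    """Parse a point-of-sale CSV export (assumed columns: customer, sku, total)."""
    rows = csv.DictReader(io.StringIO(text))
    return [Purchase("pos", r["customer"], r["sku"], float(r["total"])) for r in rows]


def ingest_online_json(text: str) -> List[Purchase]:
    """Parse online orders (assumed: a JSON list of orders, each with line items)."""
    orders = json.loads(text)
    return [
        Purchase("online", order["customer"]["id"], item["sku"], float(item["price"]))
        for order in orders
        for item in order["items"]
    ]


pos_export = "customer,sku,total\nC1,A100,19.99\nC2,B200,5.00\n"
online_export = '[{"customer": {"id": "C1"}, "items": [{"sku": "B200", "price": 5.0}]}]'
purchases = ingest_pos_csv(pos_export) + ingest_online_json(online_export)
print(len(purchases))  # 3
```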

2. Data Transformation

Once data is ingested, it must be cleaned, normalized, and formatted for analysis. The standard transformation processes include:

- Removing duplicates

- Handling missing values

- Converting formats

- Aggregating data
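A minimal sketch of these four transformations applied to raw sales rows, using only the Python standard library. The field names, the `MM/DD/YYYY` input date format, and the policy of treating a missing amount as zero are assumptions for illustration; dropping or imputing the row are equally valid choices depending on the analysis.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, List


def transform(rows: List[dict]) -> Dict[str, float]:
    """Clean raw sales rows and return total revenue per ISO date."""
    seen_ids = set()
    totals: Dict[str, float] = defaultdict(float)
    for row in rows:
        # 1. Remove duplicates, assuming order_id uniquely identifies an order.
        if row["order_id"] in seen_ids:
            continue
        seen_ids.add(row["order_id"])
        # 2. Handle missing values: here a missing amount counts as 0.0.
        raw_amount = row.get("amount")
        amount = float(raw_amount) if raw_amount not in (None, "") else 0.0
        # 3. Convert formats: MM/DD/YYYY strings become ISO YYYY-MM-DD dates.
        day = datetime.strptime(row["date"], "%m/%d/%Y").date().isoformat()
        # 4. Aggregate: sum the cleaned amounts per day.
        totals[day] += amount
    return dict(totals)


raw_rows = [
    {"order_id": 1, "date": "01/05/2024", "amount": "10.50"},
    {"order_id": 1, "date": "01/05/2024", "amount": "10.50"},  # duplicate
    {"order_id": 2, "date": "01/05/2024", "amount": None},     # missing amount
    {"order_id": 3, "date": "01/06/2024", "amount": "4"},
]
print(transform(raw_rows))  # {'2024-01-05': 10.5, '2024-01-06': 4.0}
```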

3. Data Storage

After processing, the data is kept in repositories such as:

- Data Warehouses: For structured data used in business intelligence.

- Data Lakes: For unstructured or semi-structured data, typically stored in its raw form.

- Hybrid Storage: Platforms that combine data lake and data warehouse capabilities, such as Snowflake.
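To illustrate the difference between the first two options, the sketch below writes raw events into a date-partitioned folder (a common data lake layout) and loads cleaned rows into a table with a declared schema. SQLite and a local directory stand in for a real warehouse and cloud object storage; the paths, table name, and helper names are hypothetical.

```python
import json
import sqlite3
import tempfile
from pathlib import Path
from typing import List, Tuple


def write_to_lake(root: Path, events: List[dict], date: str) -> Path:
    """Store raw events unchanged in a date-partitioned folder (Hive-style naming)."""
    partition = root / "events" / f"date={date}"
    partition.mkdir(parents=True, exist_ok=True)
    path = partition / "part-0000.jsonl"
    # JSON Lines: one raw event per line; events may have different shapes.
    path.write_text("\n".join(json.dumps(e) for e in events), encoding="utf-8")
    return path


def load_to_warehouse(conn: sqlite3.Connection, rows: List[Tuple[str, float]]) -> None:
    """Load cleaned rows into a table with a declared, analysis-ready schema."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS daily_sales (day TEXT PRIMARY KEY, revenue REAL NOT NULL)"
    )
    conn.executemany("INSERT OR REPLACE INTO daily_sales VALUES (?, ?)", rows)
    conn.commit()


lake_root = Path(tempfile.mkdtemp())
raw_file = write_to_lake(
    lake_root, [{"sensor": "s1", "temp": 21.4}, {"note": "free text"}], "2024-01-05"
)
warehouse = sqlite3.connect(":memory:")
load_to_warehouse(warehouse, [("2024-01-05", 10.5), ("2024-01-06", 4.0)])
print(raw_file.relative_to(lake_root))
print(warehouse.execute("SELECT SUM(revenue) FROM daily_sales").fetchone()[0])  # 14.5
```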

4. Data Orchestration

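Data orchestration coordinates the movement of data between systems so that each step of a pipeline runs in the right order and at the right time. Workflow orchestrators such as Apache Airflow automate these pipelines, and container platforms such as Kubernetes are often used to run the underlying tasks.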
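Orchestrators such as Apache Airflow represent a pipeline as a directed acyclic graph (DAG) of tasks and run each task only after its upstream tasks have completed. The toy sketch below shows that core idea with Python's standard `graphlib` module (Python 3.9+). It runs tasks one at a time, whereas real orchestrators add scheduling, retries, parallelism, and monitoring; the task names and the `run_pipeline` helper are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def run_pipeline(
    tasks: Dict[str, Callable[[], None]], depends_on: Dict[str, Set[str]]
) -> List[str]:
    """Run every task after its upstream tasks; return the order used.

    `depends_on` maps a task name to the names it must wait for.
    Raises graphlib.CycleError if the dependencies contain a cycle.
    """
    graph = {name: depends_on.get(name, set()) for name in tasks}
    order = list(TopologicalSorter(graph).static_order())
    for name in order:
        tasks[name]()  # sequential here; orchestrators can run independent tasks in parallel
    return order


executed: List[str] = []
tasks = {
    name: (lambda n=name: executed.append(n))
    for name in ["report", "store", "transform", "ingest"]
}
deps = {"transform": {"ingest"}, "store": {"transform"}, "report": {"store"}}
print(run_pipeline(tasks, deps))  # ['ingest', 'transform', 'store', 'report']
```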

5. Data Governance

Data governance refers to procedures and rules that ensure data protection, compliance, and quality. This includes keeping data accurate, documenting data lineage, and putting access controls in place.
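Documenting lineage can be as simple as recording every step a dataset passes through. The sketch below is one hypothetical approach, not a specific governance tool's API: each transformation returns a new `Dataset` whose lineage trail gains a timestamped entry for that step, so analysts can trace any result back to its source.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, List


@dataclass(frozen=True)
class Dataset:
    name: str
    rows: list
    lineage: List[str] = field(default_factory=list)


def apply_step(ds: Dataset, step: str, fn: Callable[[list], list]) -> Dataset:
    """Return a new Dataset with `fn` applied and the step appended to its lineage."""
    stamp = datetime.now(timezone.utc).isoformat(timespec="seconds")
    return Dataset(ds.name, fn(ds.rows), ds.lineage + [f"{stamp} {step}"])


raw = Dataset("sales", [3, 1, 2, 2], ["source: pos_export.csv"])
clean = apply_step(raw, "deduplicate", lambda rows: list(dict.fromkeys(rows)))
final = apply_step(clean, "sort", sorted)
print(final.rows)  # [1, 2, 3]
for entry in final.lineage:
    print(entry)
```

Returning a new object instead of mutating the input keeps earlier versions intact, so the raw dataset and its lineage remain available for audits.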

Businesses can create scalable and effective data ecosystems by successfully integrating these elements.

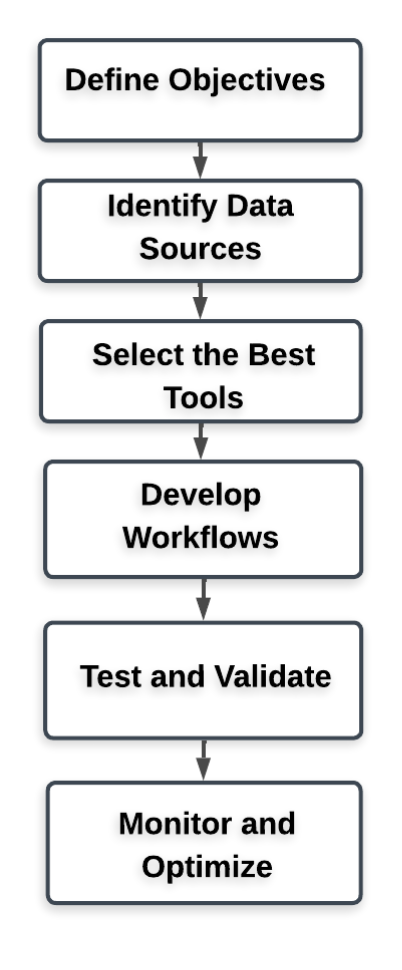

Steps to Implement Big Data Integration

Step 1: Define Objectives

Before integrating data, clearly outline your goals. Do you want better reporting? Real-time analytics? Pattern detection? These objectives guide the rest of the integration process.

Step 2: Identify Data Sources

Catalog all internal and external data sources, such as CRM systems, social media platforms, and IoT sensors, and classify each one as structured, semi-structured, or unstructured.
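A data source catalog can start as a simple typed list. The sketch below is a hypothetical structure, not a standard catalog format: it records each source's owner, whether it is internal or external, and its structure class, then groups sources by structure so that each group can be matched to suitable ingestion and storage options. The source and team names are illustrative.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List


class Structure(Enum):
    STRUCTURED = "structured"            # fixed schema, e.g. relational tables
    SEMI_STRUCTURED = "semi-structured"  # self-describing, e.g. JSON or XML
    UNSTRUCTURED = "unstructured"        # no schema, e.g. images or free text


@dataclass(frozen=True)
class DataSource:
    name: str
    owner: str
    internal: bool
    structure: Structure


def group_by_structure(catalog: List[DataSource]) -> Dict[Structure, List[str]]:
    """Group source names by structure so each group can get a suitable ingestion path."""
    groups: Dict[Structure, List[str]] = {s: [] for s in Structure}
    for source in catalog:
        groups[source.structure].append(source.name)
    return groups


catalog = [
    DataSource("crm", "sales-ops", True, Structure.STRUCTURED),
    DataSource("social_media_api", "marketing", False, Structure.SEMI_STRUCTURED),
    DataSource("iot_sensors", "operations", True, Structure.SEMI_STRUCTURED),
    DataSource("support_call_recordings", "support", True, Structure.UNSTRUCTURED),
]
for structure, names in group_by_structure(catalog).items():
    print(structure.value, names)
```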

Step 3: Select Integration Tools

Choose the best tools that align with your requirements.

- Apache NiFi is used for real-time data ingestion.

- Hevo Data can be used for ETL processes.

- AWS Glue is best for serverless data preparation.

Step 4: Develop Workflows

Implement automated workflows to streamline data ingestion, transformation, and storage. Automation reduces errors and minimizes manual intervention.
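One common way automated workflows reduce manual intervention is by retrying transient failures instead of waiting for an engineer to rerun the job. A minimal sketch, assuming that source outages surface as Python's built-in `ConnectionError`; the helper name `with_retries`, its backoff parameters, and the `flaky_extract` source are hypothetical. Other exceptions are not retried, because retrying a bug only delays the failure.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(step: Callable[[], T], attempts: int = 3, base_delay: float = 0.5) -> T:
    """Run a pipeline step, retrying transient failures with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return step()
        except ConnectionError:
            # Treat connection problems as transient; give up after the last attempt.
            if attempt == attempts:
                raise
            time.sleep(base_delay * 2 ** (attempt - 1))  # 0.5s, 1s, 2s, ... by default
    raise AssertionError("unreachable")


calls = 0


def flaky_extract() -> str:
    """Hypothetical source that fails twice before succeeding."""
    global calls
    calls += 1
    if calls < 3:
        raise ConnectionError("source temporarily unavailable")
    return "batch loaded"


print(with_retries(flaky_extract, base_delay=0.01))  # batch loaded
```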

Step 5: Test and Validate

Perform rigorous testing to ensure data accuracy, consistency, and compliance. Use data validation techniques to identify and resolve issues.
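Data validation is often expressed as a set of named rules checked against every record. The rules below (the field names, the non-negative amount check, and a three-letter uppercase currency code as a rough stand-in for ISO 4217) are hypothetical examples; the point is that each failure produces a readable message that can be logged or used to quarantine the record.

```python
from typing import Callable, Dict, List

Rule = Callable[[dict], bool]

# Hypothetical rules: each name describes a condition every record must satisfy.
RULES: Dict[str, Rule] = {
    "order_id present": lambda r: r.get("order_id") is not None,
    "amount is a non-negative number": lambda r: (
        isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0
    ),
    "currency looks like an ISO 4217 code": lambda r: (
        isinstance(r.get("currency"), str)
        and len(r["currency"]) == 3
        and r["currency"].isalpha()
        and r["currency"].isupper()
    ),
}


def validate(records: List[dict], rules: Dict[str, Rule] = RULES) -> List[str]:
    """Return one message per failed rule; an empty list means every record passed."""
    errors = []
    for i, record in enumerate(records):
        for name, rule in rules.items():
            if not rule(record):
                errors.append(f"record {i}: failed '{name}'")
    return errors


good = {"order_id": 1, "amount": 10.0, "currency": "USD"}
bad = {"order_id": None, "amount": -5, "currency": "usd"}
for message in validate([good, bad]):
    print(message)
```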

Step 6: Monitor and Optimize

Set up monitoring systems to track the performance of your data pipelines. Optimize workflows to handle growing data volumes and new sources.
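Monitoring usually starts with a few metrics per pipeline run and thresholds that trigger alerts. The sketch below tracks row counts, failures, and run duration; the 1% error-rate and 15-minute limits are arbitrary assumptions, and in practice the alerts would be sent to a monitoring or paging system rather than returned as strings.

```python
import time
from dataclasses import dataclass, field
from typing import List


@dataclass
class RunMetrics:
    """Counters for one pipeline run (times in seconds from time.monotonic())."""
    rows_in: int = 0
    rows_failed: int = 0
    started: float = field(default_factory=time.monotonic)
    finished: float = 0.0

    def finish(self) -> None:
        self.finished = time.monotonic()

    @property
    def error_rate(self) -> float:
        return self.rows_failed / self.rows_in if self.rows_in else 0.0

    @property
    def duration_s(self) -> float:
        # An unfinished run is measured up to "now".
        end = self.finished or time.monotonic()
        return end - self.started


def check_thresholds(
    m: RunMetrics, max_error_rate: float = 0.01, max_duration_s: float = 900.0
) -> List[str]:
    """Compare one run against alert thresholds; return any alerts raised."""
    alerts = []
    if m.error_rate > max_error_rate:
        alerts.append(f"error rate {m.error_rate:.1%} exceeds {max_error_rate:.1%}")
    if m.duration_s > max_duration_s:
        alerts.append(f"run took {m.duration_s:.0f}s, limit is {max_duration_s:.0f}s")
    return alerts


run = RunMetrics(rows_in=1000, rows_failed=50, started=0.0, finished=1200.0)
for alert in check_thresholds(run):
    print("ALERT:", alert)
```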

Best Practices for Big Data Integration

- Prioritize Data Quality

Data quality is crucial for analytics. Clean and normalize data before integrating it to avoid garbage-in, garbage-out scenarios. This involves standardizing formats, fixing missing or inaccurate data, and removing errors. High-quality data leads to better decision-making and reliable analytics.

- Automate Repetitive Tasks

Automation tools reduce human error and improve efficiency in tasks like data ingestion and transformation. They free teams to focus on more strategic work while keeping workflows consistent. Use ETL (Extract, Transform, Load) platforms, data pipelines, or scripts to handle repetitive tasks.

- Adopt Scalable Architectures

As organizations grow, so do the volume and variety of their data. Select tools and platforms that can scale with that growth, such as cloud-based storage on AWS, Azure, or Google Cloud, or adopt distributed computing frameworks. Scalability ensures that your infrastructure can sustain future growth while minimizing service disruptions.

- Ensure Data Security

Implement robust security measures like encryption, role-based access controls, and regular audits to protect sensitive data. Security breaches can cause major financial losses, legal issues, and lasting reputational damage. Yahoo suffered one of the largest data breaches in history: attacks in 2013 and 2014, disclosed beginning in 2016, ultimately compromised all 3 billion of its user accounts. The company faced 41 class-action lawsuits and a $35 million fine for not disclosing the full details of the breaches. A stronger security framework could have led to a different story.
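Role-based access control can be combined with masking so that each role sees only the sensitive fields it needs. The sketch below uses keyed hashing (HMAC-SHA256) for pseudonymization, which is a different technique from the encryption mentioned above: masked values stay consistent across datasets, so joins still work, but they cannot be recomputed without the secret key. The roles, column names, and policy table are hypothetical, and a real key would come from a secrets manager rather than source code.

```python
import hashlib
import hmac
from typing import Dict, Set

SENSITIVE: Set[str] = {"email", "card_number"}

# Hypothetical policy: which sensitive columns each role may see in clear text.
CLEAR_TEXT_ACCESS: Dict[str, Set[str]] = {
    "analyst": set(),
    "support": {"email"},
    "admin": {"email", "card_number"},
}


def pseudonymize(value: str, key: bytes) -> str:
    """Replace a value with a truncated keyed hash that is stable for joins."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def view_for_role(record: dict, role: str, key: bytes) -> dict:
    """Return a copy of the record with sensitive fields masked unless the role is allowed."""
    allowed = CLEAR_TEXT_ACCESS.get(role, set())  # unknown roles see nothing in clear text
    return {
        col: pseudonymize(str(val), key) if col in SENSITIVE and col not in allowed else val
        for col, val in record.items()
    }


key = b"demo-only-key"  # hypothetical; load from a secrets manager in practice
customer = {"customer_id": "C1", "email": "ana@example.com", "card_number": "4111111111111111"}
print(view_for_role(customer, "analyst", key))
print(view_for_role(customer, "support", key))
```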

Big Data Integration Tools

1. Hevo Data

Hevo Data is a no-code platform that automates data flows and supports more than 150 sources. It is an excellent choice for integrating data into well-known warehouses such as Snowflake or Google BigQuery, and it suits businesses that want a simple plug-and-play solution to streamline data ingestion into data warehouses.

2. Apache NiFi

Apache NiFi is an open-source tool for real-time data ingestion and transformation. Its drag-and-drop web interface simplifies building complex workflows, making it ideal for organizations managing real-time data streams, such as IoT systems or event-driven architectures.

3. Talend

Talend provides a set of tools that can be used for ETL procedures, data quality control, and data integration. It is widely used for handling large-scale data projects. It is suitable for enterprises that require robust data processing capabilities for big data and hybrid cloud environments.

Examples and Use Cases

1. Retail

Retailers combine data from point-of-sale (POS) systems, online platforms, and social media to analyze customer behavior and improve marketing strategies.

2. Healthcare

IoT device data, genomic data, and patient records are consolidated by hospitals and research organizations to make treatment more personalized and improve patient outcomes.

3. Finance

Banks integrate transaction data, fraud detection logs, and credit scores to enhance risk management and offer tailored financial products.

4. Smart Cities

Urban planners use IoT data, traffic sensor feeds, and energy consumption statistics to make cities run more efficiently and reduce their environmental impact.

Why is Big Data Integration Important?

1. Improved Decision-Making

Integrated data provides a holistic view, enabling more informed decisions based on comprehensive insights.

2. Operational Efficiency

Automating data-handling processes saves companies time and money and reduces errors.

3. Enhanced Customer Experience

Businesses can offer personalized services and meet customer demands more quickly when they have unified customer data.

4. Regulatory Compliance

With centralized data management, organizations can more easily meet legal and regulatory requirements such as GDPR or HIPAA.

Challenges of Big Data Integration

1. Data Silos

Integrating data from different sources is difficult, and even more so when legacy infrastructure is involved. Differences in data formats and structures across sources add further complexity.

2. Data Quality Issues

Inconsistencies and missing values must be addressed, because poor-quality data produces erroneous insights.

3. Scalability

Big data systems need to scale to accommodate the growing volume, velocity, and variety of data, and the scalable solutions required to do so can be resource-intensive.

4. Security Risks

Centralizing data makes a system a more attractive target for attackers, so strong security measures are necessary. Breaches can lead to data loss, reputational damage, and non-compliance with regulations like GDPR or HIPAA.

Future of Big Data Integration

Real-time analytics, edge computing and artificial intelligence developments are driving the future of big data integration. Here are some emerging trends:

- AI-driven Automation: The process of automating data integration operations using machine learning models. Organizations can process data faster and reduce manual work, enabling teams to focus on higher-value activities.

- Edge Computing: Processing data closer to its source to reduce latency. This enables faster decision-making and improves the scalability of big data systems, particularly in distributed environments.

- Hybrid Cloud Solutions: Seamlessly integrating on-premises and cloud-based systems for maximum flexibility. It allows organizations to scale their operations while maintaining control over critical data.

As technology evolves, businesses will have access to integration solutions that are far more effective, scalable, and intelligent.

Conclusion

Big data integration is now an essential component of modern business strategies. By integrating data from multiple sources, businesses can streamline their operations. Tools like Apache NiFi, Hevo Data, and Talend simplify integration, offering scalability and real-time processing to handle large datasets effectively. Challenges like security risks and data silos will persist, but sound practices and advanced tools make successful integration achievable. As the digital landscape evolves, big data integration will continue to shape the future of business intelligence and innovation.

Try a 14-day free trial and experience the feature-rich Hevo suite firsthand. Also, check out our unbeatable pricing to choose the best plan for your organization.

Frequently Asked Questions

1. What are examples of data integration?

Examples of data integration include combining customer data from CRM systems and social media to create a unified customer view or combining transaction data and credit histories for risk assessment by banks.

2. What are the four pillars of big data?

The four pillars of big data are Volume, Variety, Velocity, and Veracity: the huge amount of data, its many types, the speed at which it is generated, and its trustworthiness.

3. Why is it necessary to integrate big data for business?

Businesses can get useful information from different sources of data by integrating big data. This helps them make better decisions, run their operations more efficiently and give better customer experiences.