Reliable, accurate data is essential for sound business judgments and winning strategies. When data is trustworthy, decision-makers can use it with confidence to support their strategy. Inaccurate or confusing data, however, leads to poor analytics, which can undermine decisions across many areas of the business. Unreliable data can steer a firm in the wrong direction, whereas reliable information helps ensure that its choices are useful and well-informed.


In this blog, we look at data validation and data quality, two important concepts that ensure data is accurate and reliable for making business decisions. Data validation is the process of checking data for completeness and accuracy before it is entered into the system and making sure it complies with predefined standards and criteria, so that the data is reliable before it is used. Data quality, on the other hand, describes the usefulness and reliability of data as a whole, focusing on whether it remains suitable for its intended use over time. Now, let’s understand the key points of data validation vs data quality.

What is Data Validation?

Data validation is the verification of data, which can take place at various stages: before the data is entered into the system, after it is stored, or during processing. It involves applying checks and rules to ensure that data meets the required criteria, that is, that it is complete, meaningful, accurate, secure, and conforms to specific ranges and formats. It is implemented through validation rules, checks, and constraints that prevent inconsistent or incorrect data from entering the system.

Looking for the best ETL tools to connect your data sources and migrate your data correctly? Rest assured, Hevo’s no-code platform helps streamline your ETL process. Try Hevo and equip your team to:

- Integrate data from 150+ sources (60+ free sources).

- Utilize drag-and-drop and custom Python script features to transform your data.

- Rely on a risk management and security framework for cloud-based systems with SOC 2 compliance.

Try Hevo and discover why 2000+ customers have chosen Hevo over tools like AWS DMS to upgrade to a modern data stack.

Key objectives of data validation:

- Ensuring Data Correctness: Validating that data entries are accurate and conform to predefined checks. It includes:

- Data Type Validation ensures that entered data matches the expected data type (e.g., String, Date, Integer, Float, Boolean), so that each entry is suitable for its field. For example, a user’s age should be an integer (e.g., 40), not a decimal or text. A field designated for boolean choices (e.g., Yes/No or True/False), such as a subscription status, should accept only the two values “Yes” or “No”.

- Range and Constraint Validation ensures that data values fall within specified constraints. For example, an age field should be limited to a specific range, such as 0 to 120 years, so values like 150 or -30 should be flagged as invalid.

- Code and Cross-Reference Validation ensures that data entries match valid values and references in databases or predefined lists. For example, postcode validation makes sure that postal codes follow the required format and are valid for a given area. Similarly, country code validation checks whether a country code, such as “AU” for Australia or “US” for the United States of America, appears in an established list of approved ISO country codes. An entry such as “The US” would be flagged as invalid.

- Maintaining Data Consistency: Ensuring that data is uniform across all records. It includes:

- Structured Validation ensures that data follows a predefined format and structure. For example, an ‘order ID’ might be required to follow the format “OR-ID-” plus eight digits, as in “OR-ID-12345678”. An entry of “OR-ID-34598720” will be accepted, while an entry of “ORD-45-IPI” will fail structured validation.

- Consistency Validation verifies that data is uniform across the database. For example, if a customer’s “Phone Number” is changed in the personal contact details section, it should match the corresponding field in “Shipping details.” Any mismatch should be flagged so that it can be corrected.
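The checks listed above can be sketched in Python as one function that returns the problems found in a single record. This is a minimal illustration, not any specific tool’s API: the field names (`age`, `subscribed`, `country_code`, `order_id`, `contact_phone`, `shipping_phone`), the two-entry country list, the order-ID pattern (“OR-ID-” plus eight digits), and modeling the Yes/No subscription field as a Python `bool` are all assumptions chosen to mirror the examples.

```python
import re

# Hypothetical reference list; a real system would load all ISO 3166-1 alpha-2 codes.
VALID_COUNTRY_CODES = {"AU", "US"}
# Assumed order-ID format: "OR-ID-" followed by exactly eight digits.
ORDER_ID_PATTERN = re.compile(r"OR-ID-\d{8}")


def validate_record(record: dict) -> list[str]:
    """Return a list of validation errors for one record (empty list = valid)."""
    errors = []

    # Data type validation: age must be an int (bool subclasses int, so exclude it).
    age = record.get("age")
    if not isinstance(age, int) or isinstance(age, bool):
        errors.append("age must be an integer")
    # Range validation, only checked once the type is known to be correct.
    elif not 0 <= age <= 120:
        errors.append("age must be between 0 and 120")

    # Boolean field: the subscription status accepts only True or False.
    if not isinstance(record.get("subscribed"), bool):
        errors.append("subscribed must be True or False")

    # Code / cross-reference validation against the approved list.
    if record.get("country_code") not in VALID_COUNTRY_CODES:
        errors.append("country_code is not an approved ISO code")

    # Structured (format) validation of the order ID.
    order_id = record.get("order_id")
    if not isinstance(order_id, str) or not ORDER_ID_PATTERN.fullmatch(order_id):
        errors.append("order_id does not match OR-ID-########")

    # Consistency validation: the same phone number in both sections.
    if record.get("contact_phone") != record.get("shipping_phone"):
        errors.append("contact_phone and shipping_phone differ")

    return errors


good = {"age": 40, "subscribed": True, "country_code": "AU",
        "order_id": "OR-ID-34598720", "contact_phone": "0400123456",
        "shipping_phone": "0400123456"}
bad = {"age": 150, "subscribed": "Yes", "country_code": "The US",
       "order_id": "ORD-45-IPI", "contact_phone": "0400123456",
       "shipping_phone": "0400999999"}

print(validate_record(good))      # []
print(len(validate_record(bad)))  # 5
```

Returning every error, rather than stopping at the first, lets a form or pipeline report all problems with a record in one pass.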

What is Data Quality?

Data quality refers to the extent to which data meets a company’s standards for accuracy, validity, reliability, completeness, and consistency. It directly affects business decisions: good data leads to better decisions, while unreliable or inaccurate data produces misleading reports, inaccurate analytics, and poor decision-making.

Key Attributes of Data Quality:

Here are some of the key components of data quality:

- Accuracy: This describes how well data, drawn from dependable and trustworthy sources, reflects the true values or conditions it is supposed to represent, and whether it can be relied on.

- Completeness: This component verifies that the data contains all the information required for analysis and evaluation.

- Consistency: This guarantees that data is consistent across all platforms and does not clash between different datasets. It also includes maintaining reliability throughout the data lifecycle.

- Timeliness: This component checks that data is up to date and reflects current conditions, which requires refreshing it regularly. It also means that data is readily available whenever it is needed, without delay.

- Relevance: It ensures that the data provides relevant and actionable information. If data is aligned with user needs, it enhances decision-making and supports effective outcomes. Conversely, data that doesn’t meet these needs can lead to inefficiencies and misinformed decisions.

- Uniqueness: This ensures that each data record is distinct and free from unnecessary duplication, with no redundant entries.

- Validity: This checks whether data adheres to predefined formats, rules, or standards, confirming that it conforms to the required criteria.
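Several of these attributes can be measured as simple ratios over a dataset. The sketch below shows one common way to score completeness, uniqueness, and validity for a single column; the sample rows, the `email` column, and the deliberately crude email rule are hypothetical. Accuracy, timeliness, and relevance usually need external reference data or business context, so they are not scored here.

```python
import re

# Hypothetical sample: one missing email, one duplicate, one malformed value.
rows = [
    {"id": 1, "email": "ana@example.com"},
    {"id": 2, "email": "ben@example.com"},
    {"id": 3, "email": None},
    {"id": 4, "email": "ben@example.com"},
    {"id": 5, "email": "not-an-email"},
]

# Deliberately simple rule: something@something.something
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def quality_report(rows, column, is_valid):
    """Score completeness, uniqueness and validity of one column (0.0 to 1.0)."""
    values = [r.get(column) for r in rows]
    present = [v for v in values if v is not None]
    # Completeness: share of rows where the value is present.
    completeness = len(present) / len(values) if values else 0.0
    # Uniqueness: share of present values that are distinct.
    uniqueness = len(set(present)) / len(present) if present else 0.0
    # Validity: share of present values that pass the rule.
    validity = sum(1 for v in present if is_valid(v)) / len(present) if present else 0.0
    return {"completeness": completeness, "uniqueness": uniqueness, "validity": validity}


report = quality_report(rows, "email", lambda v: bool(EMAIL_RE.fullmatch(v)))
print(report)  # {'completeness': 0.8, 'uniqueness': 0.75, 'validity': 0.75}
```

Tracking scores like these over time turns data quality from a one-off check into something that can be monitored.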

Criteria Comparison: Data Validation Vs Data Quality

| Criteria | Data Validation | Data Quality |
| --- | --- | --- |
| Definition | The process of making sure that data follows all the predefined constraints and standards. | The measure of how well data meets certain characteristics such as accuracy, completeness, and relevance. |
| Objective | To ensure that data entries are accurate, valid, and adhere to predefined criteria. | To assess and enhance the overall integrity, reliability, and usefulness of data throughout its lifecycle. |
| Scope | Focuses on validating data at the point of entry or processing to meet specific rules. | Encompasses the entire data lifecycle, including data creation, storage, maintenance, and usage. |
| Focus Areas | Data type, range, format, and cross-reference checks. | Accuracy, completeness, consistency, timeliness, and relevance of data. |
| Tools | Data validation tools, validation rules in databases, and data entry forms. | Data quality management tools, data profiling tools, and data cleansing tools. |
| Examples | Ensuring a date field only accepts dates. | Analyzing sales data for accuracy, consistency, and completeness. |
| Impact on Data | Ensures data follows specific rules and constraints in order to reduce errors and prevent invalid values from entering the system. | Enhances the overall reliability and usability of data. |

Key Differences Between Data Validation and Data Quality

- Scope: Data validation focuses on ensuring that data entries meet set criteria and regulations at the point of entry or during processing. Its major goal is to guarantee that individual data items adhere to the established standards, such as proper data types, formats, and ranges.

Data quality, by contrast, takes a broader view, addressing the overall integrity and usability of data throughout its lifecycle. It involves assessing and improving many aspects of data, such as correctness, completeness, consistency, timeliness, and relevance.

- Timing: Data validation is performed at specific stages of data handling, such as during data entry or processing, so that data follows set rules before it is saved or used. It is a reactive approach that aims to catch and fix mistakes as data is entered or changed, which helps prevent errors from affecting the data’s integrity. Managing data quality, on the other hand, is an ongoing, proactive effort to keep data accurate and reliable over time. It checks that data remains relevant and up to date through every phase of its lifecycle.

- Tools and Techniques: Common tools and methods for data validation include data entry forms with built-in checks, automated scripts that enforce particular data constraints, and validation rules (such as ranges, formats, and types) embedded within databases. For small and medium-sized datasets, you can perform data validation in Excel or Google Sheets.

In Microsoft Excel, open the Data tab and select Data Validation to define rules for the values a cell can accept.

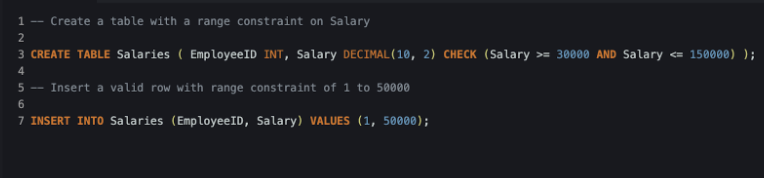

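SQL can also be used for data validation. For example, constraints declared when a table is created reject invalid rows at insert time (the columns below are hypothetical, and the last insert fails only in engines that enforce CHECK constraints):

```sql
-- Hypothetical columns; the CHECK constraint rejects non-positive salaries
CREATE TABLE Salaries (employee_id INTEGER PRIMARY KEY, salary DECIMAL(10, 2) NOT NULL CHECK (salary > 0));
INSERT INTO Salaries (employee_id, salary) VALUES (1, 55000.00);
INSERT INTO Salaries (employee_id, salary) VALUES (2, -100.00); -- rejected by the CHECK constraint
```

If you have a large dataset, or your data already lives in a database, you can use SQL stored procedures or triggers. Machine learning techniques can also help validate datasets, for example Natural Language Processing (NLP) for textual data and deep neural networks for complex validation tasks.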
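A trigger-based check can be tried without a database server using Python’s built-in `sqlite3` module. The sketch below implements the country-code cross-reference check described earlier as a `BEFORE INSERT` trigger; the `countries` and `customers` tables and the `try_insert` helper are hypothetical, and trigger syntax varies between database engines (this is SQLite’s).

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway in-memory database
conn.executescript("""
CREATE TABLE countries (code TEXT PRIMARY KEY);
INSERT INTO countries (code) VALUES ('AU'), ('US');

CREATE TABLE customers (
    id INTEGER PRIMARY KEY,
    name TEXT NOT NULL,
    country_code TEXT NOT NULL
);

-- Abort the insert when the code is not in the reference table.
CREATE TRIGGER check_country_code
BEFORE INSERT ON customers
FOR EACH ROW
BEGIN
    SELECT RAISE(ABORT, 'unknown country code')
    WHERE NEW.country_code NOT IN (SELECT code FROM countries);
END;
""")


def try_insert(name: str, country_code: str) -> bool:
    """Insert a customer; return False if a constraint or the trigger rejects it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "INSERT INTO customers (name, country_code) VALUES (?, ?)",
                (name, country_code),
            )
        return True
    except sqlite3.IntegrityError:
        return False


print(try_insert("Alice", "AU"))    # True
print(try_insert("Bob", "The US"))  # False
```

Because the rule lives in the database, every application that writes to the table gets the same check, which is the main appeal of triggers over application-side validation.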

Managing data quality relies on tools such as dashboards, data cleansing software, profiling tools, and frameworks like Deequ, an open-source library built on Apache Spark. These tools help spot and fix errors, fill in missing information, and keep data consistent across different sources. Data quality is also improved through good data management practices.
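The core cleansing steps that such tools automate can be sketched in plain Python. This minimal example works on hypothetical customer rows: it trims whitespace, upper-cases country codes, fills a missing country with an explicit `"UNKNOWN"` placeholder (an assumed convention, not a standard), and drops rows that become identical after this normalization.

```python
def cleanse(rows):
    """Return cleaned copies of the rows, dropping duplicates after normalization."""
    seen = set()
    cleaned = []
    for row in rows:
        # Trim stray whitespace; treat None as an empty string.
        name = (row.get("name") or "").strip()
        # Standardize country codes ("au " -> "AU").
        country = (row.get("country") or "").strip().upper()
        # Fill missing information with an explicit placeholder instead of None.
        record = {"name": name, "country": country or "UNKNOWN"}
        key = (record["name"], record["country"])
        if key in seen:  # identical to an earlier row once normalized
            continue
        seen.add(key)
        cleaned.append(record)
    return cleaned


raw = [
    {"name": " Ana ", "country": "au"},
    {"name": "Ana", "country": "AU "},
    {"name": "Ben", "country": None},
]
print(cleanse(raw))
# [{'name': 'Ana', 'country': 'AU'}, {'name': 'Ben', 'country': 'UNKNOWN'}]
```

Normalizing before deduplicating matters: the first two rows only look different because of formatting, so comparing raw values would keep both.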

How Data Validation Supports Data Quality

Data validation supports data quality. By checking each data entry for mistakes, validation improves the quality of the data as a whole. For example, it can require phone numbers to contain digits only, rejecting text, Boolean, or decimal values. It also applies the same rules across data fields, such as requiring all dates to use the same format.

By making important fields mandatory, it ensures that all needed data is entered, which makes the dataset more complete. It checks that data falls within set ranges and formats, and it detects and prevents duplicate entries, reducing redundancy and keeping records unique. Catching these errors early in the process improves data reliability for accurate analysis and decision-making.
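A validation step that enforces required fields and rejects duplicates can be sketched as a gate that splits an incoming batch into accepted and rejected records. The schema in `REQUIRED_FIELDS`, the use of `customer_id` as the uniqueness key, and the reject-with-reason output are assumptions for illustration.

```python
REQUIRED_FIELDS = ("customer_id", "email", "signup_date")  # hypothetical schema


def gate(batch, existing_ids=frozenset()):
    """Split a batch into (accepted, rejected); rejected items carry a reason."""
    accepted, rejected = [], []
    seen = set(existing_ids)
    for record in batch:
        missing = [f for f in REQUIRED_FIELDS if record.get(f) in (None, "")]
        if missing:
            # Completeness: required fields must be present and non-empty.
            rejected.append((record, "missing: " + ", ".join(missing)))
        elif record["customer_id"] in seen:
            # Uniqueness: key already stored, or seen earlier in this batch.
            rejected.append((record, "duplicate customer_id"))
        else:
            seen.add(record["customer_id"])
            accepted.append(record)
    return accepted, rejected


batch = [
    {"customer_id": 1, "email": "a@example.com", "signup_date": "2024-01-05"},
    {"customer_id": 2, "email": "", "signup_date": "2024-01-06"},
    {"customer_id": 1, "email": "a2@example.com", "signup_date": "2024-01-07"},
]
accepted, rejected = gate(batch)
print(len(accepted), [reason for _, reason in rejected])
# 1 ['missing: email', 'duplicate customer_id']
```

Keeping rejected records with a reason, rather than silently dropping them, makes it possible to fix and reprocess them later.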


Common Challenges in Data Validation and Data Quality

- Data Validation Challenges:

- Handling Complex Data Types and Relationships: Validating data with complicated structures, such as nested records or complex relationships, can be difficult and time-consuming. Ensuring that data adheres to rules in these scenarios often requires sophisticated validation logic and tools.

- Managing Validation Rules Across Large Datasets: Data volumes grow every day, and as datasets grow, it becomes harder to apply validation rules and maintain reliability. Ensuring that rules are applied consistently across entities, without validation processes introducing delays, can also be costly.

- Data Quality Challenges:

- Ensuring Consistent Data Quality Across Disparate Systems: Integrating data from various sources and datasets can introduce errors such as conflicting formats, duplicate records, and mismatched values. Maintaining high data quality across systems requires effective integration strategies that keep data consistent and accurate as it is brought together. Check out our blog on Data Quality Challenges to learn more.

- Addressing Data Quality Issues in Real-Time Environments: Data quality issues are especially critical when data is processed and analyzed in real time. Some real-time systems support critical decisions, for example in medicine. Such systems must therefore identify and correct data quality problems quickly, before they affect operations and decision-making.
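The first validation challenge above, rules that span nested records, can be sketched in Python. This is a minimal illustration under an assumed structure: a hypothetical order holding a list of line items, where each item needs a positive integer `quantity` and a non-negative `unit_price`, and the order’s `total` must equal the sum of `quantity * unit_price`. Prices use `Decimal` so the total comparison is not thrown off by floating-point rounding.

```python
from decimal import Decimal


def validate_order(order: dict) -> list[str]:
    """Validate an order and its nested line items; return error messages."""
    items = order.get("items")
    if not isinstance(items, list) or not items:
        return ["order must contain at least one line item"]

    errors = []
    computed_total = Decimal("0")
    for i, item in enumerate(items):
        # Item-level rules, reported with the item's position for easier fixing.
        qty = item.get("quantity")
        price = item.get("unit_price")
        if not isinstance(qty, int) or qty <= 0:
            errors.append(f"items[{i}].quantity must be a positive integer")
            continue
        if not isinstance(price, Decimal) or price < 0:
            errors.append(f"items[{i}].unit_price must be a non-negative Decimal")
            continue
        computed_total += qty * price

    # Relationship rule spanning levels: the header total must match the items.
    if not errors and order.get("total") != computed_total:
        errors.append(f"total {order.get('total')} != sum of items {computed_total}")
    return errors


order = {
    "total": Decimal("25.00"),
    "items": [
        {"quantity": 2, "unit_price": Decimal("7.50")},
        {"quantity": 1, "unit_price": Decimal("10.00")},
    ],
}
print(validate_order(order))  # []
```

The total check runs only when every item is valid, since comparing against a sum built from bad items would produce a misleading second error.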

Best Practices for Implementing Data Validation and Data Quality

- Data Validation: Two best practices for implementing data validation are:

- Validation rules and criteria should be clearly defined in order to ensure consistency and accuracy. This includes setting rules for data types, formats, and ranges.

- Automated processes should be used for validation. It includes using automated tools and scripts to apply validation rules consistently across datasets. Automation reduces the risk of human error and ensures that validation checks are performed efficiently and accurately.

- Data Quality: Some of the best practices for implementing data quality include the following:

- Data governance should be implemented. Governance frameworks support data quality initiatives by establishing roles, responsibilities, and policies for managing and maintaining data quality across the organization.

- Regular data audits should be performed to identify and address quality issues. Continuous improvement practices should be implemented to refine data quality processes and adapt to evolving data needs and standards.

Learn more in our blog on How to Improve Data Quality.

Conclusion

Efficient decision-making and effective data management require both data validation and data quality. Without data validation, data quality cannot be ensured. Data validation is the initial step in data quality management: it sets rules and constraints on data attributes to prevent erroneous data from entering the system. Data quality covers the general usefulness and accuracy of data throughout its lifecycle. Data quality ensures the trustworthiness and reliability of data over time, while data validation lays the foundation for it in the initial phases.

Hevo Data is a no-code data pipeline platform that supports 150+ connectors, making it a standout choice for businesses looking for a reliable, cost-effective, and user-friendly platform. Sign up for Hevo’s 14-day free trial and experience seamless data integration.

Frequently Asked Questions

1. What is the difference between data validation and data integrity?

Data validation ensures that data complies with preset rules and constraints, typically in the early stages of the data lifecycle. Data integrity, on the other hand, ensures the accuracy, consistency, and dependability of data throughout its entire lifecycle.

2. What is the difference between data accuracy and data validation?

Data accuracy is how true the values are in a dataset. Data validation is the process of checking the correctness of data before it can enter the system.

3. What is the difference between data quality and data assurance?

Data assurance is the process used to guarantee that data is reliable and of good quality. Data quality, by contrast, is the overall state of the data’s consistency, correctness, completeness, and reliability.

4. What is the difference between data quality and data accuracy?

Data quality is the entire state of consistency, correctness, completeness, and reliability of data. Data accuracy is how true the values are in a dataset.