Two key practices to improve workflows and outcomes are DevOps and DataOps. But what do these terms mean, and how do they differ when it comes to transforming data?

In this post on DataOps vs DevOps, we’ll define each practice, outline core principles, compare their roles in workflows, highlight overlaps and differences, and discuss how each supports efficient, scalable, and reliable data transformation along with real-world implementation scenarios. To go chronologically, we’ll start with DevOps.

What is DevOps?

DevOps (Development + Operations) is a culture and set of practices that focus on collaboration and automation between software development (Dev) and IT operations (Ops) teams to deliver more reliable solutions faster.

It was created to bridge the gap between developers (who build the applications) and operations teams (who deploy and maintain them). Unifying these traditionally separate functions lets Dev and Ops work as one team and shortens release cycles.

At its core, DevOps focuses on automating and streamlining the software release process. Teams rely on version control (like Git, SVN) for tracking changes, code reviews, and rollbacks, and on infrastructure-as-code and containerization to achieve consistent deployments across environments.

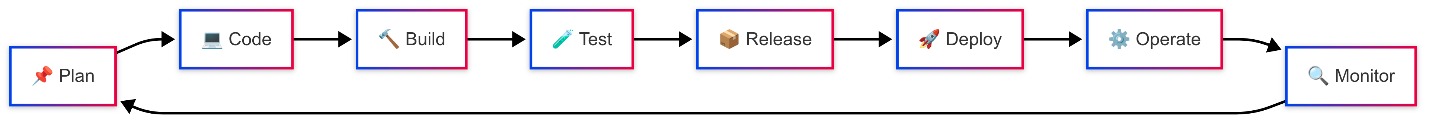

The typical DevOps lifecycle is a continuous loop of planning, coding, building, testing, releasing, deploying, and monitoring software in short cycles. Everyone from developers to operations and QA collaborates in this loop, supported by automation and monitoring tools, to deliver features faster and with higher quality.

DevOps Core Principles & Focus

- Collaboration and Culture: DevOps requires a cultural shift toward teamwork and breaking down barriers between dev and ops. All roles work towards a common goal of delivering value, rather than operating in isolation.

- Automation and CI/CD: Automation is central, from continuous integration of code changes to automated testing and deployment, because it minimizes human error and speeds up delivery. A CI/CD pipeline orchestrates these steps, enabling frequent, incremental releases.

- Quality and Monitoring: DevOps emphasizes quality assurance via automated tests, code scans, and continuous monitoring of applications in production. Issues are detected early, and feedback loops ensure constant improvement.

- Agility and Short Cycles: Inspired by agile methodologies, DevOps encourages small, frequent updates rather than rare, big launches. Shorter release cycles (often weeks or even days) allow quick iteration and fast time-to-market for software features.

By applying these principles, DevOps has dramatically increased software deployment frequency and reliability in many organizations. Elite DevOps teams deploy updates far more often and with fewer failures than traditional teams.
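As a rough illustration of the automation principle above, the sketch below runs pipeline stages in order and stops at the first failure, which is the basic behavior a CI/CD pipeline provides. The stage functions (`run_build`, `run_tests`, `run_deploy`) and `run_pipeline` are hypothetical placeholders, not part of any real CI tool.

```python
from typing import Callable, List, Tuple

# Hypothetical stages. In a real pipeline these would invoke a build tool,
# a test runner, and a deployment tool, and return whether they succeeded.
def run_build() -> bool:
    return True

def run_tests() -> bool:
    return True

def run_deploy() -> bool:
    return True

def run_pipeline(stages: List[Tuple[str, Callable[[], bool]]]) -> List[str]:
    """Run stages in order; stop at the first failure.

    Returns the names of the stages that completed successfully.
    """
    completed = []
    for name, stage in stages:
        if not stage():
            print(f"stage '{name}' failed; later stages are skipped")
            break
        completed.append(name)
    return completed

if __name__ == "__main__":
    pipeline = [("build", run_build), ("test", run_tests), ("deploy", run_deploy)]
    print(run_pipeline(pipeline))
```

Stopping at the first failed stage is what keeps a broken build or a failing test from ever reaching the deploy step.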

What is DataOps?

DataOps (Data Operations) is a data management strategy, inspired by DevOps, agile, and lean principles, that applies to data analytics and data pipelines. In simple words, DataOps aims to do for data analytics and data transformation what DevOps did for software development. It focuses on improving the speed, quality, collaboration, and automation of data workflows, from data ingestion and preparation to analysis and delivery of insights, by treating data pipelines with the same rigor that DevOps applies to application pipelines.

Like DevOps, DataOps fosters a culture of cross-functional collaboration. It brings together those who need data and those who manage it, so that valuable data can be delivered faster to drive business decisions. Importantly, DataOps emphasizes automation of data pipelines, including data acquisition, ETL/ELT (Extract, Transform, Load and Extract, Load, Transform) processes, and deployment of data transformations, to eliminate manual steps and errors. This means using version control for data transformation code (SQL, scripts, etc.), automated testing of data and schemas, and orchestrated releases of data pipeline changes, much like a CI/CD process for data. By efficiently and accurately processing data from ingestion to delivery, DataOps ensures that data is timely, reliable, and of high quality for analytics.
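To make the idea of version-controlled, automatically tested transformation code concrete, here is a minimal sketch. The transformation `normalize_orders`, its column names, and the check `check_normalize_orders` are all hypothetical; the point is only that a transformation kept in source control can ship with a test that runs on every change.

```python
def normalize_orders(rows):
    """Hypothetical transformation: clean customer names and convert cents to dollars."""
    return [
        {
            "customer": row["customer"].strip().title(),
            "amount_usd": row["amount_cents"] / 100,
        }
        for row in rows
    ]

def check_normalize_orders():
    """A small test that a CI job could run before the change is released."""
    raw = [{"customer": "  ada lovelace ", "amount_cents": 1250}]
    expected = [{"customer": "Ada Lovelace", "amount_usd": 12.5}]
    assert normalize_orders(raw) == expected

if __name__ == "__main__":
    check_normalize_orders()
    print("transformation test passed")
```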

DataOps Core Principles & Focus

- Data Pipeline Automation: Treat “data as code” by managing data transformations in source control and automating pipeline steps (ingest, clean, transform, load). Continuous integration and delivery for data changes is crucial in updating data pipelines seamlessly.

- Continuous Testing & Quality Control: Embed tests to validate data (e.g., schema checks, data quality assertions) at each stage. Monitoring data flows in real time helps catch anomalies or pipeline failures quickly, ensuring trust in data outputs.

- Collaboration Across Roles: Break down silos among data engineers, analysts, scientists, and business users. All stakeholders participate in defining, developing, and reviewing data products, as well as aligning data work with business needs and KPIs.

- Agility and Continuous Improvement: DataOps encourages iterative analytics development: small, incremental changes to datasets, models, or reports that can be delivered and evaluated rapidly. Feedback (e.g., from data consumers) is continuously incorporated to improve data relevance and accuracy.

- Governance and Security: Because data often includes sensitive information, DataOps builds data governance, access control, and compliance checks into the pipeline. This ensures that as data pipelines deliver value, they also respect privacy and regulatory requirements (e.g., GDPR). Security in DataOps extends to controlling data access and integrity, whereas DevOps security focuses more on application and infrastructure hardening.

When implemented successfully, DataOps significantly shortens the cycle time from a data need (a question or new data request) to a data success (a helpful, trustworthy answer or dataset).
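The continuous testing principle listed above (schema checks and data quality assertions at each stage) can be sketched as a validation gate that a pipeline runs before loading a batch. The schema, the column names, and the rule that amounts must not be negative are assumptions made up for this example.

```python
# Hypothetical expected schema: column name -> Python type.
EXPECTED_SCHEMA = {"order_id": int, "amount_usd": float, "country": str}

def validate_batch(rows, schema=EXPECTED_SCHEMA):
    """Return a list of human-readable problems; an empty list means the batch passes."""
    errors = []
    for i, row in enumerate(rows):
        # Schema check: every expected column must be present.
        missing = set(schema) - set(row)
        if missing:
            errors.append(f"row {i}: missing columns {sorted(missing)}")
            continue
        # Schema check: every column must have the expected type.
        for column, expected_type in schema.items():
            if not isinstance(row[column], expected_type):
                errors.append(f"row {i}: {column} should be {expected_type.__name__}")
        # Data quality assertion (assumed business rule): no negative amounts.
        if isinstance(row["amount_usd"], float) and row["amount_usd"] < 0:
            errors.append(f"row {i}: amount_usd is negative")
    return errors

if __name__ == "__main__":
    batch = [
        {"order_id": 1, "amount_usd": 9.99, "country": "DE"},
        {"order_id": 2, "amount_usd": -1.0, "country": "US"},
    ]
    for problem in validate_batch(batch):
        print(problem)
```

A pipeline would typically refuse to load the batch, or route it to quarantine, whenever the returned list is not empty.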

Comparing Workflows

Both DevOps and DataOps implement pipelines, but the difference lies in what moves through the pipeline. In DevOps, it is the application code and software services, whereas in DataOps, it is the data itself (datasets, analytics, machine learning models derived from data).

DevOps pipeline: For each change, the following cycle repeats rapidly:

Plan → Code → Build → Test → Release → Deploy → Operate → Monitor.

DataOps pipeline: For every new data transformation (like adding a new field in a data model or creating a new report), the following reiterates –

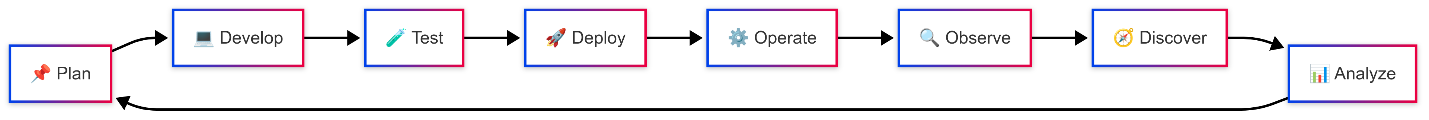

Plan → Develop → Test → Deploy → Operate → Observe → Discover → Analyze.

A key difference in workflows is timing and continuity. DevOps pipelines typically run on discrete code changes. You might deploy application updates daily or weekly. In contrast, DataOps pipelines deal with ever-changing data; they often run continuously or on frequent schedules to process new incoming data.
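One common way to handle continuously arriving data on a schedule is incremental processing with a watermark: each run picks up only the records that arrived since the previous run. The sketch below assumes records carry a hypothetical `event_time` field; `process_new_records` is an illustrative helper, not an API of any orchestration tool.

```python
from datetime import datetime

def process_new_records(records, watermark):
    """Select records newer than the watermark and advance the watermark.

    Returns (new_records, new_watermark). If nothing new arrived,
    the watermark is returned unchanged.
    """
    new_records = [r for r in records if r["event_time"] > watermark]
    if new_records:
        watermark = max(r["event_time"] for r in new_records)
    return new_records, watermark

if __name__ == "__main__":
    source = [
        {"id": 1, "event_time": datetime(2024, 1, 1, 9, 0)},
        {"id": 2, "event_time": datetime(2024, 1, 1, 9, 5)},
    ]
    # First scheduled run: everything is new.
    batch, mark = process_new_records(source, datetime.min)
    print([r["id"] for r in batch])

    # More data arrives before the next scheduled run.
    source.append({"id": 3, "event_time": datetime(2024, 1, 1, 9, 10)})
    batch, mark = process_new_records(source, mark)
    print([r["id"] for r in batch])
```

The same code runs unchanged on every schedule tick; what changes between runs is the data, which is the contrast with a DevOps pipeline that runs because the code changed.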

Overlapping Principles and Shared Practices

Given that DataOps draws inspiration from DevOps, it’s no surprise that they share many core principles. Here are key areas where DevOps and DataOps overlap.

- Automation & CI/CD.

- Collaboration.

- Agile, Iterative Approach.

- Emphasis on Quality (Testing & Monitoring).

- Governance and Security Integration.

Many organizations find that fostering a strong DevOps culture in their software teams creates a template for their data teams to follow. For example, using infrastructure-as-code to provision data pipeline resources or applying continuous testing frameworks for data are extensions of the DevOps mindset into DataOps.

DataOps vs DevOps: Differences

| Aspect | DevOps (Software Focus) | DataOps (Data Focus) |
|---|---|---|
| Primary Focus | Optimizing software development and delivery pipelines. | Optimizing data management and data analytics pipelines. |
| Goal/Objective | Faster, more reliable application releases and updates. | Faster, more reliable data delivery and insights, with high data quality. |
| Core Deliverables | Running software products: applications, services, and infrastructure changes. | High-quality data products: datasets, analytics reports, ML models, and dashboards. |
| Key Processes | CI/CD for code: continuous integration of code changes, automated testing, and deployment to environments. Uses infrastructure as code and containerization for consistency. | CI/CD for data: continuous integration of data pipeline code (SQL, ETL scripts), automated data testing, and deployment to data environments (e.g., data warehouse or pipeline scheduler). Uses data orchestration tools and data versioning for consistency. |
| Team Composition | Primarily technical roles: software developers, IT/ops engineers, DevOps engineers, QA, and SREs (Site Reliability Engineers). Business stakeholders give input but are usually not hands-on in the pipeline. | Cross-functional data roles: data engineers, DataOps engineers, data scientists, analysts, data architects, and often business domain experts. A mix of technical and domain-focused people collaborate throughout the data lifecycle. |
| Toolchain | Software development tools: version control (Git), CI servers (Jenkins, GitLab CI), config management (Ansible), containers (Docker), orchestration (Kubernetes), monitoring (Prometheus, New Relic), etc. | Data pipeline tools: data integration and ETL platforms (Apache NiFi, Talend), workflow orchestrators (Airflow, DataKitchen), analytics engineering tools (dbt), data catalogs, data quality monitoring tools, etc. |
| Examples of Metrics | Deployment frequency, lead time for changes, change failure rate, and mean time to recovery (MTTR) measure software delivery speed and stability. | Pipeline throughput/latency, data freshness, data quality measures (error rates, validation passes), and issue resolution time measure data pipeline performance and reliability. |
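Two of the DataOps metrics named in the table, data freshness and validation passes, are simple to compute once a pipeline records when it last loaded data and how many checks passed. The function names and inputs below are hypothetical, and real definitions vary by team; this is one reasonable reading.

```python
from datetime import datetime, timedelta

def data_freshness(last_loaded_at: datetime, now: datetime) -> timedelta:
    """Age of the most recent successful load: smaller means fresher data."""
    return now - last_loaded_at

def validation_pass_rate(passed: int, total: int) -> float:
    """Fraction of data validation checks that passed in a run."""
    if total <= 0:
        raise ValueError("total must be positive")
    return passed / total

if __name__ == "__main__":
    age = data_freshness(datetime(2024, 1, 1, 6, 0), datetime(2024, 1, 1, 8, 30))
    print(f"data is {age} old")
    print(f"pass rate: {validation_pass_rate(3, 4):.0%}")
```

Tracking these values per run makes it possible to alert when freshness exceeds an agreed threshold or when the pass rate drops.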

Case Studies and Examples of DataOps vs DevOps

Let’s have a look at a couple of scenarios where organizations have implemented DevOps and DataOps:

- Netflix uses DevOps for fast, reliable app updates and DataOps to personalize recommendations through real-time data pipelines analyzing user behavior.

- Capital One uses DevOps for secure app deployments and DataOps for real-time fraud detection and risk analytics using automated, governed data pipelines.

It’s not that an organization must choose one over the other; rather, success often comes from using DevOps to create a solid foundation for software and infrastructure and using DataOps to build agile and trustworthy data pipelines on that foundation.

Conclusion

DevOps and DataOps are two sides of the same coin when it comes to enabling digital transformation, but they apply to different layers: DevOps to software, DataOps to data. Both aim to eliminate bottlenecks and improve reliability through automation, iterative development, and collaboration.

DevOps ensures that the platforms and applications that process data are delivered and updated quickly and reliably. DataOps ensures that the data itself is delivered quickly and reliably through those platforms, all the way to the people who need it. In practice, if your goal is efficient, scalable, and reliable data transformation, you should strive to implement both DevOps and DataOps principles. Encourage your software engineers and data engineers to collaborate, perhaps in guilds or joint planning sessions, because improvements in one domain can often benefit the other.

Frequently Asked Questions

1. Will MLOps replace DevOps?

No, MLOps won’t replace DevOps. Instead, it complements it by addressing the unique challenges of deploying and managing machine learning models, while DevOps focuses on traditional software delivery.

2. What is the DataOps lifecycle?

The DataOps lifecycle is a set of practices that automate and streamline data integration, testing, deployment, and monitoring to improve data quality and collaboration across teams. It includes stages like data ingestion, transformation, orchestration, testing, deployment, and continuous monitoring.