First, think of the word “data”. Data is the information we collect in the form of text, numbers, images, etc., coming from different sources like spreadsheets, databases, or the cloud.


Now, “Orchestration” comes from music. Imagine a conductor leading the orchestra, ensuring that all the musicians play in sync. In tech, orchestration means organizing different processes to work together smoothly.

Today, keeping data and workflows organized is essential for working efficiently, and that is where data orchestration comes into play. In this blog, you will learn how orchestration works, why it matters, and how it helps, even if you are just starting out.

What’s Data Orchestration?

Data orchestration is the process of coordinating and automating the collection, transformation, and delivery of data across different sources and systems. Whether the data comes from internal databases like MySQL, cloud storage services like Google Cloud Storage or AWS S3, or third-party APIs, orchestration tools help integrate and process it seamlessly, keeping it consistent and reducing manual work.

Hevo Data, a No-Code Data Pipeline Platform, empowers you to ETL your data from a multitude of sources to Databases, Data Warehouses, or any other destination of your choice in a completely hassle-free & automated manner.

Check out what makes Hevo amazing:

- It has a highly interactive UI, which is easy to use.

- It streamlines your data integration task and allows you to scale horizontally.

- The Hevo team is available round the clock to extend exceptional support to you.

Hevo has been rated 4.7/5 on Capterra. Know more about our 2000+ customers and give us a try.

Why is it important?

Data orchestration helps eliminate data silos (collections of data held by one part of an organization that other parts cannot easily access), optimizes data flow, and ensures that the organization has the right data in the right place at the right time. This is essential for making sound business decisions. It also reduces the resources spent on manual data management.

How does it work?

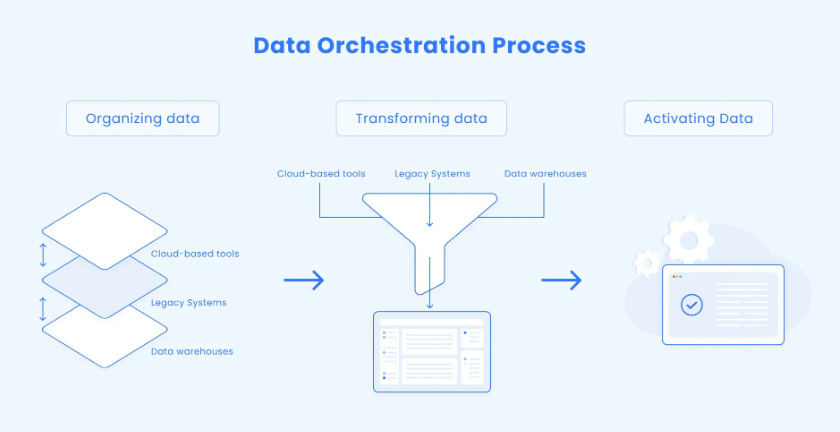

Data orchestration tools perform three main functions:

- Collect Data: They collect data from different places, like spreadsheets, databases, or cloud storage.

- Organize Data: They clean the data and organize it so that it is easy to use.

- Send Data: They deliver the cleaned data where it is needed, for instance, a report, a dashboard, or another system.

The picture above shows the functions of data orchestration tools: organizing data from various sources, transforming it into a meaningful format, and delivering it to where it is needed, which keeps data management simple and efficient.
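The three functions can be illustrated with a minimal sketch in plain Python. The source names, the record fields, and the list standing in for a dashboard are hypothetical placeholders for real spreadsheets, databases, and destinations; an orchestration tool would call stages like these on a schedule rather than in a one-off script.

```python
from typing import Callable

Record = dict[str, object]


def collect(sources: dict[str, Callable[[], list[Record]]]) -> list[Record]:
    """Collect: pull records from every source and tag each with its origin."""
    collected: list[Record] = []
    for name, fetch in sources.items():
        for record in fetch():
            collected.append({**record, "source": name})
    return collected


def organize(records: list[Record]) -> list[Record]:
    """Organize: normalize emails, drop rows without one, and remove duplicates."""
    seen: set[str] = set()
    cleaned: list[Record] = []
    for record in records:
        email = str(record.get("email") or "").strip().lower()
        if not email or email in seen:
            continue  # skip incomplete or duplicate customers
        seen.add(email)
        cleaned.append({**record, "email": email})
    return cleaned


def send(records: list[Record], destination: list[Record]) -> int:
    """Send: deliver the cleaned records to a destination and report how many."""
    destination.extend(records)
    return len(records)


# Hypothetical sources: a spreadsheet export and a database query result.
sources = {
    "spreadsheet": lambda: [{"email": " Ana@Example.com "}, {"email": ""}],
    "database": lambda: [{"email": "ana@example.com"}, {"email": "bo@example.com"}],
}
dashboard: list[Record] = []  # stands in for a report or dashboard
delivered = send(organize(collect(sources)), dashboard)
print(delivered, [r["email"] for r in dashboard])  # prints 2 ['ana@example.com', 'bo@example.com']
```

Keeping collection, organization, and delivery as separate functions is what lets an orchestration tool schedule, retry, or monitor each stage on its own.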

In short, data orchestration differs from simply moving data: it not only moves data from various sources but also makes it clean, organized, and ready to use, saving time and effort and reducing mistakes. Some of the most popular tools used for data orchestration are Apache Airflow, Prefect, and Dagster.

How Does It Stand Apart from Pipelines, ETL, and Workflow Automation?

It is very easy to confuse data orchestration with data pipelines and other data engineering tools. Though they all play their own role in processing the data, they have different purposes and functions.

Let’s dive into it!

Data Orchestration vs. Data Pipelines

- Data pipelines are automated routes that move data from one place to another. Pipelines often follow an ETL pattern, extracting, transforming, and loading data in a fixed sequence. However, a pipeline mainly focuses on moving data along a predefined path and is not very flexible.

- Data orchestration, on the other hand, is more flexible. It not only moves data but also coordinates multiple data pipelines and automates complex workflows. For instance, if a data pipeline moves a store's sales data from its database to a reporting tool, data orchestration manages several such pipelines, ensuring that sales, inventory, and marketing data are combined effectively and in the right order.
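The store example can be sketched in plain Python: three independent pipelines (sales, inventory, marketing) run in parallel, and a combining step runs only after all of them finish. The pipeline functions and their data are hypothetical, and the thread pool is only an illustration; an orchestrator such as Airflow expresses the same idea through task dependencies.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical pipelines; each returns a per-product summary from its own source.
def sales_pipeline() -> dict[str, int]:
    return {"apple": 30, "pear": 12}


def inventory_pipeline() -> dict[str, int]:
    return {"apple": 100, "pear": 5}


def marketing_pipeline() -> dict[str, str]:
    return {"apple": "spring-promo"}


def combine(sales: dict[str, int], stock: dict[str, int],
            campaigns: dict[str, str]) -> list[dict[str, object]]:
    """Join the three pipeline outputs into one report row per product."""
    return [
        {
            "product": product,
            "units_sold": sales[product],
            "in_stock": stock.get(product, 0),
            "campaign": campaigns.get(product, "none"),
        }
        for product in sorted(sales)
    ]


def orchestrate() -> list[dict[str, object]]:
    # Independent pipelines can run at the same time...
    with ThreadPoolExecutor(max_workers=3) as pool:
        sales = pool.submit(sales_pipeline)
        stock = pool.submit(inventory_pipeline)
        campaigns = pool.submit(marketing_pipeline)
        # ...but combine() waits for all three results: that is the "right order".
        return combine(sales.result(), stock.result(), campaigns.result())


for row in orchestrate():
    print(row)
```

The key point is the dependency, not the threads: the combining step never sees partial data, because it only starts once every upstream pipeline has produced its result.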

Data Orchestration vs. ETL Tools

- ETL (Extract, Transform, Load) tools focus on the data transformation process: extracting raw data, transforming it into a usable format, and loading it into a storage system such as a data warehouse.

- Data orchestration goes beyond ETL. It includes the ETL steps but also handles scheduling, coordinates them with other workflows, and triggers additional tasks (like sending a notification if something goes wrong).
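The "extra tasks around ETL" idea can be sketched with a small wrapper that retries a step and sends a notification when every attempt fails. The `run_with_alerts` helper, the retry count, the ETL step functions, and the list used as a notification channel are all hypothetical; in Airflow, comparable behavior is usually configured on a task with settings such as `retries` and `on_failure_callback` rather than written by hand.

```python
from typing import Callable


def run_with_alerts(task: Callable[[], object], name: str,
                    notify: Callable[[str], None], retries: int = 2) -> object:
    """Run one ETL step, retrying on failure and alerting if every attempt fails."""
    last_error = None
    for attempt in range(1, retries + 2):  # the first try plus `retries` retries
        try:
            return task()
        except Exception as exc:  # a real pipeline would catch narrower errors
            last_error = exc
            print(f"{name}: attempt {attempt} failed ({exc})")
    notify(f"{name} failed after {retries + 1} attempts: {last_error}")
    raise RuntimeError(f"{name} failed") from last_error


# Hypothetical ETL steps used to exercise the wrapper.
calls = {"extract": 0}


def flaky_extract() -> list[int]:
    """Fails on the first call (e.g. a source briefly offline), then succeeds."""
    calls["extract"] += 1
    if calls["extract"] == 1:
        raise ConnectionError("source unavailable")
    return [1, 2, 3]


def broken_load() -> None:
    """Always fails, to show the alert path."""
    raise ValueError("warehouse rejected the rows")


alerts: list[str] = []  # stands in for email or chat notifications
rows = run_with_alerts(flaky_extract, "extract", alerts.append)
print(rows, alerts)  # prints [1, 2, 3] [] because the retry succeeded

try:
    run_with_alerts(broken_load, "load", alerts.append, retries=1)
except RuntimeError:
    pass
print(alerts)
```

The ETL logic itself (`flaky_extract`, `broken_load`) knows nothing about retries or alerts; that surrounding behavior is what an orchestrator adds.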

To learn more, check out this blog on Data Orchestration vs ETL Tools.

Data Orchestration vs. Workflow Automation Tools

- As the name suggests, workflow automation tools automate repetitive tasks, but they are usually limited to particular platforms and applications.

- Data orchestration, by contrast, is cross-platform. It coordinates work across multiple systems, manages data coming from various sources, and keeps everything running smoothly.

Key Differences Summarized:

| Feature | Pipelines | ETL | Data Orchestration |
|---|---|---|---|
| Main function | Move data from one place to another | Transform data from raw to useful formats | Coordinates multiple workflows and processes |
| Scope | Focus on one task | Perform data transformation only | Manages multiple data pipelines and tasks |
| Flexibility | Fixed sequence | Focus on extraction and transformation | Dynamic workflows |
| Cross-platform management | Limited | Limited to specific data types | Integrates and automates across various platforms |

In short, data orchestration acts as the conductor of your data tools, coordinating multiple tasks. Rather than handling a single job, it manages data from various sources for multiple uses.

Getting Started With Data Orchestration

If you are just starting out, don't worry! You don't need to know everything about data orchestration right away. It is better to start with small tasks, like automating the gathering and cleaning of data. As you learn, you can explore the more advanced features.

Here are a few simple steps to try:

- Pick one data source, like a spreadsheet, and try connecting it to a data orchestration tool.

- Then, set up a workflow that automatically pulls data from your spreadsheet into another place, like a report or dashboard.

In the next section, I will show you how to set up your first data orchestration workflow using Apache Airflow.

Let’s get started!

Hands-On Practice for Beginners: Get Started with Apache Airflow

Are you ready to try data orchestration?

Now, you are going to try data orchestration by setting up a simple workflow in Apache Airflow, one of the most widely used tools for managing data processes.

In this hands-on practice, the main goals are to:

- Pull data from different sources.

- Clean it and send it to a dashboard or a database.

What follows is simple starter code that you can build on as you learn data orchestration.

I will take you through each step with easy instructions so that you can get your hands dirty with data orchestration.

Ready? Let’s jump in!

Step 1: Installing Apache Airflow

Before you start, you need to install Apache Airflow. Docker is a simple way to run Apache Airflow.

First, make sure you have Docker installed on your machine. If not, download it from Docker’s official page.

Then, run the following command in the terminal to start Apache Airflow.

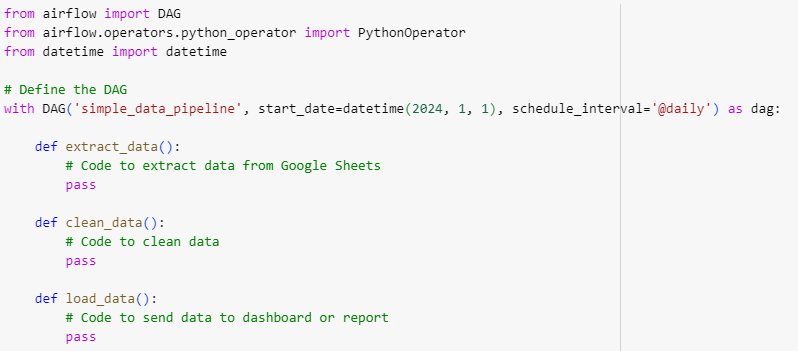

Step 2: Now, you will use Python to define a DAG (Directed Acyclic Graph). Each task you want to perform is a node in the graph, and arrows indicate the order in which the tasks are executed.

In your DAG, the order will be: extract data from Google Sheets, clean the data, and then load it into a reporting tool or dashboard.

Next, add the Python code for extracting, cleaning, and loading the data.

Note: A DAG is a core concept in Apache Airflow that represents a workflow. It consists of tasks and their order: each task is a node, and each arrow represents the order of execution.

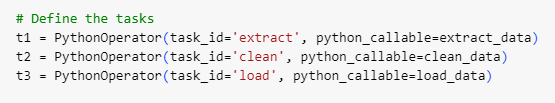
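If you want to test the extraction, cleaning, and loading logic before wiring it into Airflow, here is a minimal sketch using only the Python standard library. It assumes the Google Sheet has already been exported as rows (a header row followed by data rows); the sample rows, the `sales` table, and the in-memory SQLite database standing in for a reporting tool are all hypothetical. Inside Airflow, functions like these would typically become the `python_callable` of tasks.

```python
import sqlite3

# Hypothetical rows, shaped like a Google Sheet exported as a list of rows.
SHEET_ROWS = [
    ["region", "amount"],
    ["North", "120.50"],
    ["  south ", "80"],
    ["East", ""],               # missing amount: dropped during cleaning
    ["North", "not a number"],  # invalid amount: dropped during cleaning
]


def extract() -> list[dict[str, str]]:
    """Turn the header row plus data rows into a list of dictionaries."""
    header, *rows = SHEET_ROWS
    return [dict(zip(header, row)) for row in rows]


def clean(records: list[dict[str, str]]) -> list[tuple[str, float]]:
    """Normalize region names and keep only rows with a valid numeric amount."""
    cleaned = []
    for record in records:
        try:
            amount = float(record["amount"])
        except ValueError:
            continue  # empty or non-numeric amount
        cleaned.append((record["region"].strip().title(), amount))
    return cleaned


def load(rows: list[tuple[str, float]], conn: sqlite3.Connection) -> int:
    """Load cleaned rows into a reporting table and return the table's row count."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (region TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales (region, amount) VALUES (?, ?)", rows)
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM sales").fetchone()[0]


conn = sqlite3.connect(":memory:")
print(load(clean(extract()), conn))  # prints 2
```

Calling the functions directly like this is a simplification: in Airflow, each task runs separately, so data passed between tasks normally goes through XCom or external storage rather than nested function calls.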

Step 3: Once the extraction, cleaning, and loading functions are written, define the tasks in the DAG.

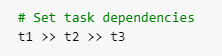

Step 4: Set the task dependencies as follows:

`t1 >> t2 >> t3`

This line of code defines the order in which the tasks are executed. The >> operator indicates that t1 (extracting the data) runs before t2 (cleaning the data), and t2 runs before t3 (loading the data).
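To see what that dependency line expresses, here is a toy model written from scratch. The `Task` class and `run` function are hypothetical and are not how Airflow works internally; they only reuse the same >> notation (through Python's `__rshift__` method) to record dependencies, then execute the tasks in dependency order with the standard library's `graphlib.TopologicalSorter` (Python 3.9+). The last part shows why the graph must be acyclic: once a loop exists, no valid execution order exists.

```python
from graphlib import CycleError, TopologicalSorter
from typing import Callable


class Task:
    """A toy task that remembers which tasks must run before it (hypothetical)."""

    def __init__(self, task_id: str, action: Callable[[], None]) -> None:
        self.task_id = task_id
        self.action = action
        self.upstream: set["Task"] = set()

    def __rshift__(self, other: "Task") -> "Task":
        # `a >> b` records "a runs before b"; returning `other` allows chaining.
        other.upstream.add(self)
        return other


def run(tasks: list[Task]) -> list[str]:
    """Run every task after all of its upstream tasks; raise CycleError on a loop."""
    graph = {task: task.upstream for task in tasks}
    order = list(TopologicalSorter(graph).static_order())  # fails before any task runs
    for task in order:
        task.action()
    return [task.task_id for task in order]


log: list[str] = []
t1 = Task("extract", lambda: log.append("extracted"))
t2 = Task("clean", lambda: log.append("cleaned"))
t3 = Task("load", lambda: log.append("loaded"))

t1 >> t2 >> t3  # the same dependency line as in Step 4

first_order = run([t3, t1, t2])  # list order does not matter, dependencies do
print(first_order)  # prints ['extract', 'clean', 'load']

t3 >> t1  # now load must also run before extract: a cycle, so no longer a DAG
try:
    run([t1, t2, t3])
    cycle_found = False
except CycleError:
    cycle_found = True
print("cycle detected:", cycle_found)  # prints cycle detected: True
```

Because `t1 >> t2` returns `t2`, a chain like `t1 >> t2 >> t3` reads left to right as a sequence of "runs before" links.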

By running this on your system, you will have set up your first data orchestration workflow with Apache Airflow, a flexible tool that handles every step from extraction to loading.

What’s Next?

Start experimenting with more complex workflows by adding additional functions or connecting to other resources and databases.

If you want to take things further, you can try:

- Adding notifications when a task fails (like sending an email or a message).

- Exploring Airflow’s ability to manage multiple data pipelines at once.

- Connecting to different sources, such as cloud storage services (AWS, Google Cloud) or APIs.

Finally, we did it!

Finally, it is worth seeing how data orchestration is used to solve real-world problems. Since data is everywhere, orchestration is used across many industries.

Real World Applications

Data orchestration is not just a technical term; it has a significant impact on many industries. Here are some real-world examples:

- E-commerce: In e-commerce, data comes from multiple streams like product inventory, sales, customer data, and marketing. Data orchestration tools help these streams work together, enabling real-time updates and better decisions about marketing campaigns.

- Healthcare: Hospitals gather large amounts of data from patient records, lab results, and medical equipment. With data orchestration, hospitals and healthcare organizations can automate the flow of this data and transform it into a usable format, so that doctors and researchers have up-to-date information that supports effective treatment.

- Financial Services: Banks and financial institutions deal with large volumes of transaction data across various platforms. Data orchestration helps them move and manage that data across platforms and systems, which helps them produce accurate reports and supports fraud detection.

- Manufacturing: Manufacturers rely on data from many sources, such as supply chain data and quality reports. With data orchestration, they can make production more efficient and reduce production time.

Conclusion

Data orchestration is the process of managing data across various sources so that an organization can access it easily and use it to make better-informed decisions. It saves time and speeds up data-driven tasks. By automating workflows, improving data integration, and providing real-time access to data, orchestration helps businesses use their data more effectively and efficiently.

I hope you enjoyed learning about data orchestration and how it can make things simpler! Making data more accessible, organized, and automated helps businesses work smarter, not harder. Give orchestration a try, and you will be amazed by the difference it makes!

If you are looking to improve your data management, consider using Hevo Data to migrate your data from various sources to your preferred destination. Hevo is a no-code data pipeline platform that not only transfers your data but also enriches it. Sign up for Hevo’s 14-day free trial and experience seamless data migration.


FAQs

1. What is data ingestion?

Data ingestion is the process of collecting data from various sources (like website APIs) and bringing it into storage systems, such as data warehouses, data lakes, or databases.

2. What is data orchestration?

Data orchestration not only pulls data from various sources but also processes it, transfers it, and loads it into different systems. It coordinates work across those sources and automates the overall workflow. So, data orchestration is a broader term that goes beyond any single task.

3. What is the difference between data management and data orchestration?

By now, you are familiar with what data orchestration does, so you can think of it as one part of data management. Data management covers not only the collection and use of data but also governance, storage, and security.