Today, every organization relies on data to make decisions across its business operations. But how can businesses be confident that their data is clear, correct, consistent, and comprehensive? This is where data quality and data observability come in. Although the two terms are often used interchangeably, they refer to distinct practices that each help improve how data is handled. Understanding the differences between data quality and data observability enables organizations to build effective data management frameworks.


In this blog, we’ll explore data observability vs. data quality: what each one means, how they differ, and how to implement both effectively together.

What is Data Quality?

Data quality is a measure of how well a given set of data is suited for its intended use in an organization. Higher-quality data is more accurate and valuable than lower-quality data, which is why organizations strive to improve the quality of the data they collect in order to make better decisions.

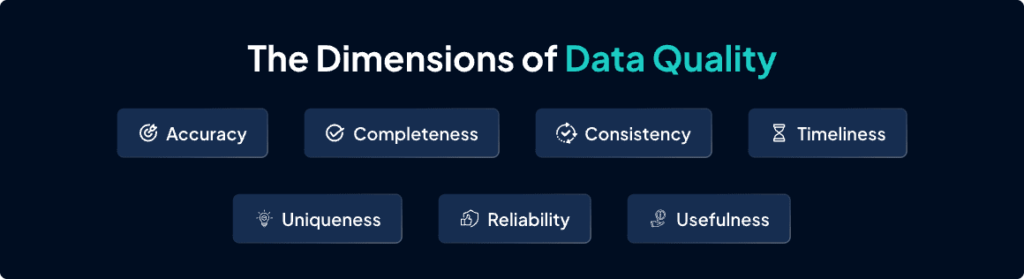

Organizations typically assess data quality along the following dimensions.

Key Components of Data Quality

- Accuracy – This measures how many errors the data contains. Accuracy is typically measured by comparing the dataset with a trusted reference dataset.

- Completeness – This determines whether all required data fields are populated. To assess it, calculate the proportion of records with missing values.

- Consistency – This checks whether data retrieved from different databases matches. If it does not, one or more of the datasets may contain errors.

- Timeliness – This evaluates whether the data is current. Recent data generally reflects the present state of the business more reliably than older data.

- Uniqueness – This checks for duplicate records in a dataset. Merging or removing duplicates and avoiding redundancy improves data quality.

- Reliability – Data must remain accurate and consistent over time.

- Usefulness – Data must be relevant and appropriate for the context in which it is used, regardless of how it was gathered.
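To make these dimensions concrete, the Python sketch below computes four of them as simple ratios over a handful of records: completeness, uniqueness, accuracy against a reference dataset, and timeliness. The field names (`id`, `email`, `updated_at`), the sample records, the reference values, and the 30-day freshness window are all hypothetical, chosen only for illustration.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical records; field names and values are illustrative only.
records = [
    {"id": 1, "email": "a@example.com", "updated_at": datetime(2024, 5, 1, tzinfo=timezone.utc)},
    {"id": 2, "email": None, "updated_at": datetime(2024, 5, 2, tzinfo=timezone.utc)},
    {"id": 2, "email": "b@example.com", "updated_at": datetime(2023, 1, 1, tzinfo=timezone.utc)},
    {"id": 3, "email": "c@example.com", "updated_at": datetime(2024, 5, 3, tzinfo=timezone.utc)},
]

# Hypothetical "trusted" reference values used to measure accuracy.
reference_emails = {1: "a@example.com", 2: "b@example.com", 3: "c@example.org"}


def completeness(rows, field):
    """Share of rows where `field` is populated (not None or empty)."""
    filled = sum(1 for r in rows if r.get(field) not in (None, ""))
    return filled / len(rows)


def uniqueness(rows, key):
    """Share of rows with a distinct `key` value; 1.0 means no duplicates."""
    return len({r[key] for r in rows}) / len(rows)


def accuracy(rows, field, reference, key="id"):
    """Share of populated values that match the reference dataset."""
    checked = [r for r in rows if r.get(field) is not None and r[key] in reference]
    if not checked:
        return 0.0
    matches = sum(1 for r in checked if r[field] == reference[r[key]])
    return matches / len(checked)


def timeliness(rows, field, now, max_age):
    """Share of rows updated within `max_age` of `now`."""
    fresh = sum(1 for r in rows if now - r[field] <= max_age)
    return fresh / len(rows)


now = datetime(2024, 5, 10, tzinfo=timezone.utc)
print(f"completeness(email): {completeness(records, 'email'):.2f}")
print(f"uniqueness(id):      {uniqueness(records, 'id'):.2f}")
print(f"accuracy(email):     {accuracy(records, 'email', reference_emails):.2f}")
print(f"timeliness:          {timeliness(records, 'updated_at', now, timedelta(days=30)):.2f}")
```

Each score falls between 0 and 1, so the dimensions can be tracked side by side. For this sample, completeness, uniqueness, and timeliness each come out at 0.75, and accuracy at 0.67.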

Poor data quality is costly: IBM attributes $3.1 trillion in losses for businesses in the United States to it. Inaccurate customer information and duplicate records impede effective work and hinder the achievement of goals. According to an Experian study, 77% of companies believe that data quality problems have a negative impact on their profitability. That is why it is essential to ensure that the data you collect meets high quality standards, so that operations run smoothly and successfully.

Achieve optimal data quality and observability effortlessly with Hevo’s no-code ETL platform. Hevo streamlines data integration, allowing your team to focus on deriving actionable insights rather than managing complex pipelines. With Hevo, you can:

- Connect to 150+ plug-and-play integrations effortlessly

- Ensure seamless syncing of historical data without manual intervention

- Automate column mapping from source to destination for accurate data delivery

Choose Hevo for a seamless experience and see why industry leaders like Meesho say, “Bringing in Hevo was a boon.”

What is Data Observability?

Data observability is the assessment of the health and functionality of data systems and the components that make them up. It follows data from the point where it is created to the point where it is used, giving organizations insight into data quality and effectiveness. Data observability differs from regular monitoring in its goal: to understand and solve problems and prevent them from happening again.

Data observability goes beyond data quality: rather than only describing a data-related problem, it aims to help resolve the problem and prevent it from recurring. With data observability, an organization can better identify its most critical datasets, the users of that data, and the problems arising from it.

Key Components of Data Observability

- Monitoring: Data observability involves continuous monitoring of data pipelines, data changes, and data flows. It captures data lineage, dependencies, and transformations in real time.

- Alerting: Data observability tools automatically trigger notifications when they detect data problems. These alerts help data engineers and data operators respond to issues promptly.

- Visibility: Data flow mapping gives organizations insight into their data across a distributed landscape and every stage of their IT infrastructure. It also makes debugging and performance tuning easier.
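To illustrate how monitoring and alerting fit together, here is a minimal Python sketch of two common checks: freshness (has the table been loaded recently?) and volume (is today's row count far from recent history?). The table name, the 6-hour freshness limit, and the z-score rule are assumptions chosen for illustration; observability tools ship their own, often more sophisticated, detectors.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from statistics import mean, stdev


@dataclass
class Alert:
    table: str
    check: str
    message: str


def check_freshness(table, last_loaded, now, max_lag):
    """Alert if the table has not been loaded within `max_lag`."""
    lag = now - last_loaded
    if lag > max_lag:
        return Alert(table, "freshness", f"last load {lag} ago exceeds {max_lag}")
    return None


def check_volume(table, history, today, z_threshold=3.0):
    """Alert if today's row count is more than `z_threshold` standard deviations
    from the historical mean (history needs at least two data points)."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        anomalous = today != mu
    else:
        anomalous = abs(today - mu) / sigma > z_threshold
    if anomalous:
        return Alert(table, "volume", f"{today} rows vs. mean {mu:.0f}")
    return None


def run_checks(table, last_loaded, history, today_rows, now):
    """Run both checks and return only the alerts that fired."""
    results = [
        check_freshness(table, last_loaded, now, max_lag=timedelta(hours=6)),  # assumed limit
        check_volume(table, history, today_rows),
    ]
    return [a for a in results if a is not None]


now = datetime(2024, 5, 10, 12, 0, tzinfo=timezone.utc)
alerts = run_checks(
    table="orders",  # hypothetical table name
    last_loaded=datetime(2024, 5, 10, 1, 0, tzinfo=timezone.utc),
    history=[10_000, 10_250, 9_900, 10_100, 10_050],
    today_rows=4_200,
    now=now,
)
# Printing stands in for a real notification channel.
for alert in alerts:
    print(f"[ALERT] {alert.table}/{alert.check}: {alert.message}")
```

In this example both checks fire: the table was last loaded 11 hours ago, and 4,200 rows is far below the historical mean of about 10,060.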

How Are Data Quality and Data Observability Related?

Together, data quality and data observability form a solid foundation for ensuring the accuracy, relevance, and efficiency of an organization’s data projects. Understanding how the two work together helps teams navigate today’s challenging and dynamic data landscape.

Here are the key ways in which data quality and data observability work together:

- Shared Focus on Accuracy: Both data quality and data observability emphasize the importance of reliable data. Data quality ensures that data remains complete and accurate throughout its lifecycle, and data observability tracks data in real time to enforce this accuracy.

- Real-Time Quality Monitoring: Traditional data quality assessments often run as batch processes, so they may miss urgent problems. Data observability fills this gap with real-time monitoring, allowing organizations to identify anomalies as they happen, before they grow out of proportion.

- Proactive Issue Detection: Data observability can detect anomalies early, before they affect downstream users, which supports the goals of data quality. Catching these irregularities early helps organizations protect data integrity so that the data remains a valid resource for decision-makers.

- Root Cause Analysis and Data Integrity: Data quality and data observability both rely on root cause analysis as a fundamental procedure. In data quality, root cause analysis identifies the causes of inconsistencies or inaccuracies. In data observability, it identifies where pipelines break, helping preserve data integrity across data streams and processes.
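Lineage is what makes root cause analysis tractable: when several tables look broken at once, walking the dependency graph upstream separates the likely source of the failure from its downstream symptoms. The sketch below assumes a hypothetical lineage graph expressed as a Python dictionary that maps each table to its direct upstream sources; the table names are illustrative.

```python
# Hypothetical lineage: each table maps to the tables it reads from.
LINEAGE = {
    "raw_orders": [],
    "raw_customers": [],
    "stg_orders": ["raw_orders"],
    "stg_customers": ["raw_customers"],
    "fct_revenue": ["stg_orders", "stg_customers"],
    "dashboard_sales": ["fct_revenue"],
}


def upstream(table, lineage):
    """Return every table that `table` depends on, directly or indirectly."""
    seen, stack = set(), list(lineage.get(table, []))
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(lineage.get(node, []))
    return seen


def root_causes(failing, lineage):
    """Failing tables with no failing ancestor are the likely root causes."""
    failing = set(failing)
    return {t for t in failing if not (upstream(t, lineage) & failing)}


failing = {"stg_orders", "fct_revenue", "dashboard_sales"}
print(sorted(root_causes(failing, LINEAGE)))  # -> ['stg_orders']
```

Here three tables are failing, but only `stg_orders` has no failing ancestor, so it is the first place to investigate; the other two failures are probably inherited from it.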

Data Quality vs Data Observability: Key Differences

In data management, data observability and data quality differ in their focus, their objectives, and how they detect problems.

- Focus: Data quality maintains the integrity and validity of the data content, while data observability is concerned with the health and functionality of the systems that handle data.

- Objective: Data quality aims to give decision-makers the best possible data, while data observability tries to identify problems in data systems so they can be solved before they become data quality issues.

- Detection: Data quality issues are often discovered only after complications arise, whereas data observability aims to catch potential complications as they happen.

Data Observability vs Data Quality

The following table breaks down the fundamental differences between data observability and data quality. Understanding these differences helps organizations get the most from their data strategies and processes.

| Criteria | Data Observability | Data Quality |
| --- | --- | --- |
| Focus | Real-time monitoring of data pipelines and systems | Ensuring the intrinsic attributes of data (e.g., accuracy, completeness) |
| Objective | Proactively detect, troubleshoot, and prevent anomalies | Maintain and improve the reliability, accuracy, and consistency of data |
| Execution Timing | Continuous monitoring throughout the data lifecycle | Applied during data profiling, validation, and transformation processes |
| Methodology | Monitoring data systems to gain real-time insights | Assessing and improving data attributes like accuracy, completeness, and timeliness |
| Primary Benefit | Early detection of data issues to prevent disruptions | Ensures data is reliable, trustworthy, and fit for purpose |
| Scope | Covers the entire data pipeline, including systems and workflows | Focuses specifically on the attributes of individual datasets |
| Impact | Reduces operational and decision-making disruptions by identifying issues early | Helps ensure accurate analysis, informed decisions, and insights based on high-quality data |
| End Goal | Improve system reliability and data pipeline health, and prevent recurring issues | Maintain the inherent quality of data, ensuring it meets business needs |
| Dynamic Nature | Real-time analysis and insights into the behavior of data and systems | Primarily focused on static attributes of data that are measured periodically |

How to Implement Data Quality and Observability in Your Organization

Implementing data quality and observability practices requires an organized process that fits the necessary skills, analytics, and other initiatives into your company’s structure.

Below is a comprehensive guide outlining the steps to successfully implement data quality and observability initiatives:

Step 1: Align Goals and Metrics

- Understand Business Goals: First, align data quality and observability with your organization’s goals. Determine how improved data management will support decision-making and operational performance.

- Identify Critical Data: Determine which datasets are most critical to your organization’s operations and prioritize them.

- Set KPIs: Translate your goals into measurable key performance indicators (KPIs) with clear targets.
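Writing KPIs down as explicit targets makes them checkable rather than aspirational. The sketch below is a minimal illustration; the KPI names and target values are hypothetical and should be replaced with ones that reflect your own critical data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Kpi:
    name: str
    target: float
    higher_is_better: bool = True

    def met(self, value: float) -> bool:
        """True if the measured value satisfies the target."""
        return value >= self.target if self.higher_is_better else value <= self.target


# Hypothetical KPIs for critical datasets; targets are illustrative only.
KPIS = [
    Kpi("customer_email_completeness", target=0.98),
    Kpi("order_id_uniqueness", target=1.0),
    Kpi("pipeline_freshness_lag_hours", target=6.0, higher_is_better=False),
]


def evaluate(measurements: dict) -> dict:
    """Map each KPI name to whether its latest measurement meets the target."""
    return {k.name: k.met(measurements[k.name]) for k in KPIS}


latest = {
    "customer_email_completeness": 0.96,
    "order_id_uniqueness": 1.0,
    "pipeline_freshness_lag_hours": 3.5,
}
for name, ok in evaluate(latest).items():
    print(f"{name}: {'met' if ok else 'MISSED'}")
```

The `higher_is_better` flag lets one structure cover both "at least" targets such as completeness and "at most" targets such as pipeline lag.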

Step 2: Build the Right Infrastructure

- Choose the Right Tools: Select data quality and observability tools that integrate with your current solutions. Look for capabilities such as profiling, validation, and monitoring.

- Integrate with Existing Systems: Build data quality audits and real-time monitoring directly into your existing workflows.
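One common way to build checks into existing workflows is a quality gate: a step that validates a batch before it is loaded and halts the pipeline if validation fails. The sketch below is tool-agnostic; the check functions, the `load` step, and the column names are hypothetical placeholders for whatever your pipeline actually uses.

```python
class DataQualityError(Exception):
    """Raised when a batch fails validation and must not be loaded."""


def not_null(column):
    """Build a check that fails if any row has a null in `column`."""
    def check(rows):
        bad = sum(1 for r in rows if r.get(column) is None)
        return bad == 0, f"{bad} null value(s) in '{column}'"
    return check


def unique(column):
    """Build a check that fails if `column` contains duplicate values."""
    def check(rows):
        values = [r.get(column) for r in rows]
        dupes = len(values) - len(set(values))
        return dupes == 0, f"{dupes} duplicate value(s) in '{column}'"
    return check


def quality_gate(rows, checks):
    """Run every check; raise with all failure messages if any check fails."""
    failures = [msg for ok, msg in (c(rows) for c in checks) if not ok]
    if failures:
        raise DataQualityError("; ".join(failures))
    return rows


def load(rows):
    """Hypothetical load step standing in for a write to a warehouse."""
    print(f"loaded {len(rows)} rows")


batch = [{"order_id": 1, "amount": 20.0}, {"order_id": 2, "amount": None}]
try:
    load(quality_gate(batch, [not_null("order_id"), not_null("amount"), unique("order_id")]))
except DataQualityError as err:
    print(f"pipeline halted: {err}")
```

Because the gate raises instead of silently filtering rows, a bad batch never reaches downstream consumers, and the error message lists every failed check at once.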

Step 3: Data Profiling and Cleansing

- Profile Data: Examine your data closely to identify errors, inconsistencies, and gaps. This step gives you a clear picture of the current state of your data and the problems you are likely to encounter.

- Cleansing Processes: Design automated procedures that address data quality problems, correcting errors and eliminating duplicates.
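Profiling and cleansing can start very simply. The sketch below profiles each column (null count and distinct non-null count) and then applies a few automated cleansing rules: trimming whitespace, lowercasing emails, and dropping exact duplicates. The rules, the `name` and `email` columns, and the sample rows are illustrative assumptions; real cleansing rules depend on your data.

```python
from collections import defaultdict


def profile(rows):
    """Per-column null count and distinct non-null count.
    Columns missing from a row are not counted for that row."""
    stats = defaultdict(lambda: {"nulls": 0, "distinct": set()})
    for row in rows:
        for col, val in row.items():
            if val is None or val == "":
                stats[col]["nulls"] += 1
            else:
                stats[col]["distinct"].add(val)
    return {c: {"nulls": s["nulls"], "distinct": len(s["distinct"])} for c, s in stats.items()}


def cleanse(rows):
    """Trim strings, lowercase emails (assumed column), and drop exact duplicates."""
    seen, cleaned = set(), []
    for row in rows:
        fixed = {k: v.strip() if isinstance(v, str) else v for k, v in row.items()}
        if isinstance(fixed.get("email"), str):
            fixed["email"] = fixed["email"].lower()
        key = tuple(sorted(fixed.items()))  # hashable fingerprint of the cleaned row
        if key not in seen:
            seen.add(key)
            cleaned.append(fixed)
    return cleaned


# Hypothetical sample rows.
raw_rows = [
    {"name": " Ada ", "email": "ADA@Example.com"},
    {"name": "Ada", "email": "ada@example.com"},
    {"name": "Grace", "email": None},
]
print(profile(raw_rows))
print(cleanse(raw_rows))
```

Profiling before cleansing shows why the rules are needed: the raw data appears to have three distinct names, but after trimming and normalization the first two rows turn out to be the same record.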

Step 4: Real-Time Monitoring and Alerts

- Establish data health metrics: Define metrics that indicate data health and quality, starting with accuracy, completeness, and consistency.

- Implement continuous monitoring: Build monitoring that tracks the status of pipelines and data-ingesting systems and surfaces any variations or problems as they occur.

- Set up automatic notifications: Configure alerts that fire when data anomalies or quality issues surface, so that problems are handled quickly and effectively.
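Health metrics and notifications often come together as declarative alert rules. The sketch below evaluates hypothetical rules (metric name, threshold, direction, and severity) against the latest metric values and passes each breach to a `notify` callback. Here `notify` simply collects messages in a list, standing in for a real channel such as email or chat; the metric names and thresholds are assumptions.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class AlertRule:
    metric: str
    threshold: float
    below: bool  # True: alert when value < threshold; False: alert when value > threshold
    severity: str


# Hypothetical rules; metric names and thresholds are illustrative only.
RULES = [
    AlertRule("completeness", 0.95, below=True, severity="warning"),
    AlertRule("consistency", 0.99, below=True, severity="critical"),
    AlertRule("freshness_lag_minutes", 60, below=False, severity="critical"),
]


def evaluate_rules(metrics: dict, rules: List[AlertRule], notify: Callable[[str], None]) -> int:
    """Send one notification per breached rule and return the number of breaches."""
    breaches = 0
    for rule in rules:
        value = metrics.get(rule.metric)
        if value is None:
            # A missing metric is treated as a problem in its own right.
            notify(f"[warning] metric '{rule.metric}' missing")
            breaches += 1
            continue
        breached = value < rule.threshold if rule.below else value > rule.threshold
        if breached:
            notify(f"[{rule.severity}] {rule.metric}={value} breached threshold {rule.threshold}")
            breaches += 1
    return breaches


sent = []
count = evaluate_rules(
    {"completeness": 0.97, "consistency": 0.93, "freshness_lag_minutes": 95},
    RULES,
    sent.append,
)
for msg in sent:
    print(msg)
```

Keeping rules as data rather than code makes it easy to review thresholds with stakeholders and to tune them over time without touching the evaluation logic.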

Step 5: Foster Collaboration and Training

- Create Cross-Functional Teams: Bring together data engineers, data analysts, and data consumers to monitor and fix data quality and observability issues.

- Offer Training: Train team members on data quality and observability practices so they can use and analyze data effectively.

- Ensure Clear Communication: Establish reporting channels that team members can use to flag problems and escalate ideas.

Step 6: Continuous Improvement

- Frequent data assessments: Schedule periodic data audits to assess the effectiveness of your data quality and observability strategies, and use the results to refine your tactics and processes.

- Ongoing optimization: Regularly revisit and adjust your data quality and observability methods as you gain new knowledge and as organizational requirements change. Adapt your strategy to address new data issues as they emerge.

- Integrating stakeholder feedback: Proactively gather feedback from different teams and incorporate it into your data efforts. Collaborating on how data is managed helps keep your processes efficient and relevant.
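Periodic audits are most useful when their results are compared over time. The small sketch below assumes each audit is stored as a dictionary of metric scores between 0 and 1 (a hypothetical format with illustrative values) and flags metrics that regressed by more than a tolerance since the previous audit.

```python
def regressions(previous: dict, current: dict, tolerance: float = 0.01) -> dict:
    """Return metrics whose score dropped by more than `tolerance` since the last audit."""
    return {
        metric: (previous[metric], score)
        for metric, score in current.items()
        if metric in previous and previous[metric] - score > tolerance
    }


# Hypothetical audit results from two consecutive review periods.
q1_audit = {"accuracy": 0.97, "completeness": 0.92, "uniqueness": 0.999}
q2_audit = {"accuracy": 0.97, "completeness": 0.88, "uniqueness": 0.995}

for metric, (before, after) in regressions(q1_audit, q2_audit).items():
    print(f"{metric} regressed: {before:.3f} -> {after:.3f}")
```

The tolerance keeps small fluctuations, such as the 0.004 drop in uniqueness here, from drowning out the meaningful regression in completeness.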

Conclusion

In conclusion, data quality and data observability are both crucial for organizations that depend on reliable data. High-quality data is accurate, complete, and consistent, which reduces errors and variance in the data. Data observability, in turn, gives organizations real-time visibility into their data systems, allowing them to manage data flows and respond to issues as they arise.

To build a framework that both guarantees high-quality data and detects and resolves the issues that could affect it, businesses need to adopt data quality and data observability together. Combined, the two approaches let organizations respond quickly to data issues so that their data remains credible and useful.

Ultimately, integrating data quality and observability strategies lets organizations make decisions with confidence in a shifting data environment and achieve better-optimized, higher-performing results. Adopting both reflects a commitment to optimizing data-driven work and continuously improving organizational outcomes.

Sign up for a 14-day free trial and simplify your data integration process. Check out the pricing details to understand which plan fulfills all your business needs.

FAQs

1. What is the difference between data observability and data quality?

Data quality ensures that collected data is accurate and consistent for decision-making, while data observability monitors data systems in real time to identify and address issues, ultimately enhancing overall data quality.

2. What are the 5 V’s of data quality?

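The 5 V’s are most commonly used to describe big data, and veracity is the one most directly tied to data quality. They include:

- Volume – the amount of data generated.

- Variety – the different types of data.

- Velocity – the speed at which data is generated.

- Veracity – the accuracy of data.

- Value – the usefulness of data for decision-making.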

3. What are the four pillars of data observability?

The four pillars of data observability include:

- Monitoring – overseeing system flow to tackle real-time issues.

- Tracing – mapping data’s journey during processing.

- Alerting – notifying when data issues arise for immediate action.

- Data Lineage – tracking data movement to understand its source and dependencies.